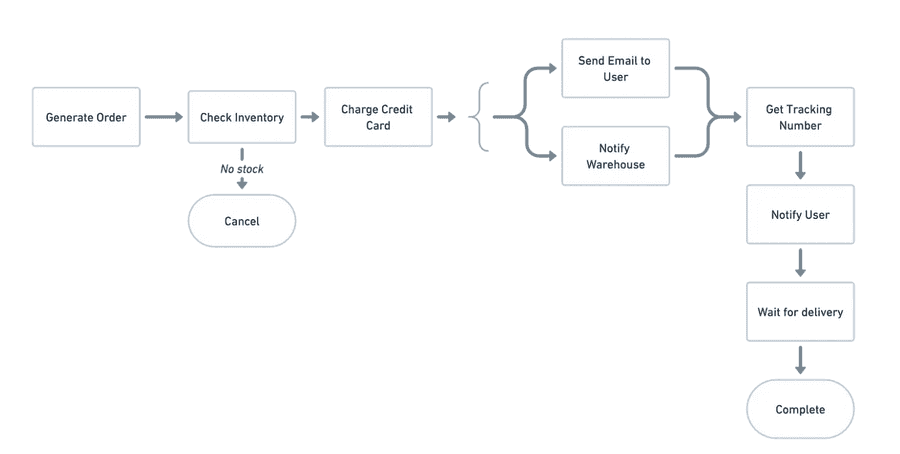

Upgrade EKS Clusters Across Multiple Versions in Less Than a Day Using Automated Workflows

April 08, 2024

Upgrading your Kubernetes clusters to the latest version can be a time-consuming and laborious process, even with a managed Kubernetes…