Business

Financial Services

Business Problem

Asynchronous workflows

Region

North America

Cloud Platforms

Overview

Summation is the first decision-grade AI platform that helps leaders make faster strategic decisions by surfacing real-time, audit-ready insights at scale. It works with data-rich businesses that need to ingest operational and financial data, model it using SQL, and generate automated, AI-assisted analysis that helps CFO teams move faster on planning, reporting, and decision-making.

As the platform matured and customer usage increased, Summation faced a critical architectural challenge. The team needed a robust way to orchestrate long-running, compute-intensive, asynchronous workflows across data ingestion, modeling, and downstream analytics. That need led them to Orkes.

The Need for Asynchronous Workflows

As Summation built its platform, including backend services and a modeling layer on top of ingested data, the team realized they needed an asynchronous architecture as a foundation. Their workloads are data-heavy and require orchestration that supports both technical workflows and an agentic execution layer.

Technical workflows

Summation ingests customer data in daily batches. Each ingestion triggers long-running, compute-intensive SQL model rebuilds, including aggregations, quarterly breakouts, pivoting, and other transformations. These steps cannot be chained synchronously and need to run as background jobs in a defined sequence.

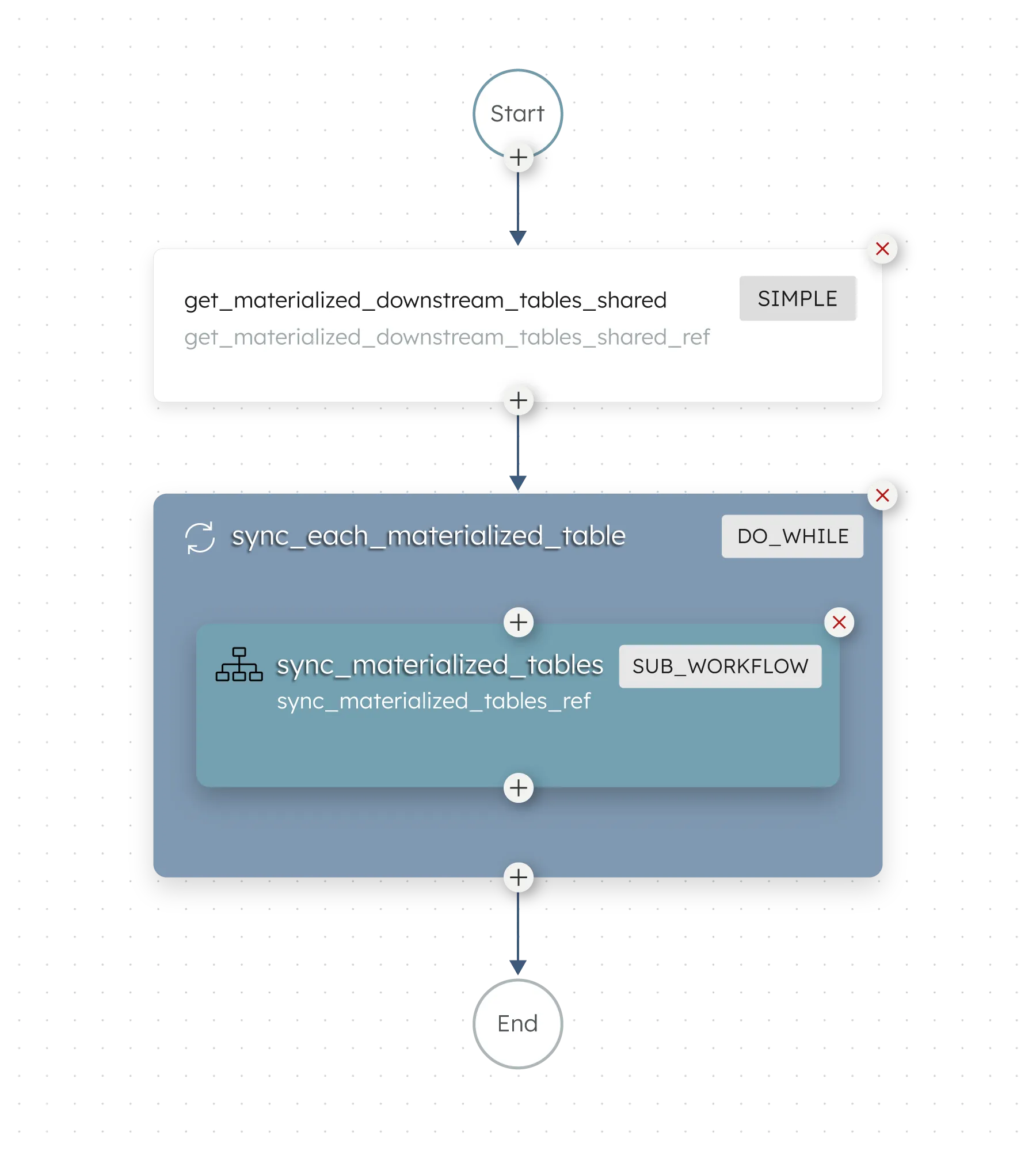

After each daily batch lands (see the workflow below), Summation computes a lineage graph to map dependencies across models and tables. With lineage established, it materializes models in dependency order, moving level by level through the graph. Where dependencies allow, models at the same level run in parallel, and downstream models start only after their prerequisites complete. Results are cached or materialized to keep analytics and dashboards performant.

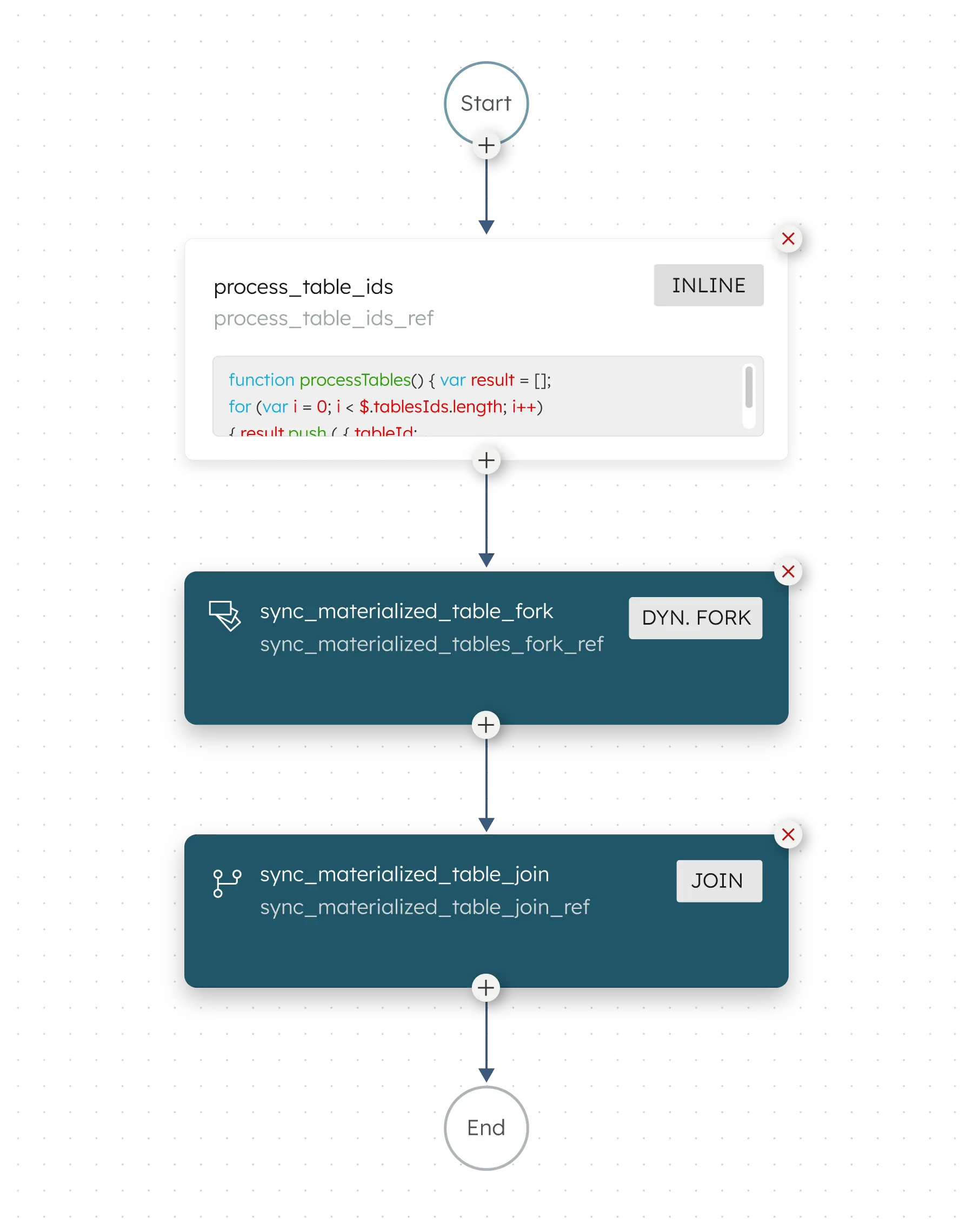
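
The level-by-level ordering described above can be sketched in a few lines of Python. This is a minimal illustration, not Summation's implementation: the function name `lineage_levels` and the model names are hypothetical, and the lineage graph is assumed to be available as a mapping from each model to the models it reads from.

```python
def lineage_levels(dependencies):
    """Group models into levels so each level depends only on earlier levels.

    `dependencies` maps a model name to the set of models it reads from.
    """
    # Collect every model, including ones that only appear as upstream sources.
    models = set(dependencies)
    for upstream in dependencies.values():
        models.update(upstream)

    remaining = {m: set(dependencies.get(m, ())) for m in models}
    levels = []
    while remaining:
        # A model is ready once all of its upstream models are materialized.
        ready = sorted(m for m, deps in remaining.items() if not deps)
        if not ready:
            raise ValueError("cycle detected in lineage graph")
        levels.append(ready)
        for m in ready:
            del remaining[m]
        for deps in remaining.values():
            deps.difference_update(ready)
    return levels


# Hypothetical models, for illustration only.
example = {
    "orders_clean": {"orders_raw"},
    "customers_clean": {"customers_raw"},
    "revenue_by_quarter": {"orders_clean"},
    "revenue_by_customer": {"orders_clean", "customers_clean"},
}
levels = lineage_levels(example)
```

Models within one inner list have no dependencies on each other, so they are the candidates for parallel execution; the next list starts only after the previous one finishes.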

Because lineage is recomputed every run, Summation uses dynamic forking to fan out the right number of materialization tasks at runtime, then joins them before advancing to the next level. To run this safely at scale, they needed orchestration support for multi-step workflows, dynamic forking and joining, parallel execution, retries, queuing, and the ability to stop a workflow mid-run when downstream work is no longer valid.

The visual above shows the Summation parent workflow, which processes downstream tables based on lineage and materializes each one by invoking a subworkflow detailed below. This example uses dynamic forking, where the number of parallel executions is determined at runtime based on the table IDs provided as input to the workflow. Orkes provides operational guardrails for the task execution layer. Summation uses it to enforce global limits on concurrent workers and to control access to shared data using rateLimitKeys. This ensures that no two workflow steps write to the same table at the same time and helps maintain system stability during heavy analytical workloads.
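
To make the fan-out concrete, here is a hedged sketch of a worker-side helper that turns a list of table IDs into the two structures a Conductor dynamic fork consumes: a list of task definitions and a map of inputs keyed by task reference name. The field names follow the Conductor dynamic fork format as commonly documented; the subworkflow name `materialize_table`, the `tableId` input key, and the helper name itself are assumptions made for illustration.

```python
def build_dynamic_fork(table_ids, subworkflow_name="materialize_table"):
    """Return (tasks, inputs) describing one parallel branch per table ID."""
    if len(set(table_ids)) != len(table_ids):
        # Reference names must be unique within a workflow execution.
        raise ValueError("duplicate table IDs would produce duplicate branches")

    tasks = []
    inputs = {}
    for table_id in table_ids:
        ref = f"materialize_{table_id}"
        tasks.append({
            "name": subworkflow_name,
            "taskReferenceName": ref,
            "type": "SUB_WORKFLOW",
            "subWorkflowParam": {"name": subworkflow_name},
        })
        # Each branch receives its own input, looked up by reference name.
        inputs[ref] = {"tableId": table_id}
    return tasks, inputs


tasks, inputs = build_dynamic_fork(["t1", "t2", "t3"])
```

A task upstream of the fork would typically return these two values as its output, so the number of branches tracks whatever the current lineage level contains.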

"Dynamic forking and the flexibility around timeouts and retries were key for us. Our workflows are straightforward in steps, but they require control and resilience inside each step, and Orkes fits that need well."

– Hema Nagarajan, Director of Engineering at Summation

Agentic execution layer

Beyond data pipelines, Summation uses Orkes to power a multi-agent workflow process for scheduled analysis and insight generation. For example, quarterly revenue analysis is triggered using Orkes scheduling and executed as a structured, multi-stage process where sub-agent workflows hand off from one stage to the next.

Orkes acts as the orchestration backbone for agent execution, sequencing stages, coordinating handoffs, and ensuring outputs from one step feed the next. Once a scheduled workflow starts, workers issue database queries, assemble the required context, and route results into successive agent stages such as retrieval and validation.

Kafka Integration

Kafka is the eventing backbone of Summation's platform. Backend services emit events when upstream data or models change, signaling that downstream processing needs to run.

Orkes consumes these Kafka events to trigger the right workflows automatically, decoupling event production from workflow execution. When new data arrives or a model is updated, Orkes orchestrates lineage recomputation, model rebuilds, and downstream refreshes so changes propagate reliably across environments and clusters.
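
As a rough illustration of how an event can be mapped to a workflow, the sketch below builds an event handler definition as a plain Python dictionary. The overall shape (`event`, `actions`, `start_workflow`) follows Conductor's event handler format; the `kafka:<integration>:<topic>` event string, the integration name `kafka_prod`, the topic, the workflow name, and the `tableIds` payload field are all assumptions, and the actual values depend on how the Kafka integration is configured.

```python
import json


def kafka_event_handler(topic, workflow_name, integration="kafka_prod"):
    """Build an event handler definition that starts a workflow per event."""
    return {
        "name": f"{topic}_to_{workflow_name}",
        # Assumed event naming: broker type, integration name, then topic.
        "event": f"kafka:{integration}:{topic}",
        "actions": [
            {
                "action": "start_workflow",
                "start_workflow": {
                    "name": workflow_name,
                    # Hypothetical payload field passed through as workflow input.
                    "input": {"tableIds": "${tableIds}"},
                },
            }
        ],
        "active": True,
    }


handler = kafka_event_handler("model_updated", "rebuild_downstream_models")
handler_json = json.dumps(handler, indent=2)
```

Keeping the mapping in a definition like this is what lets producers emit events without knowing which workflows react to them.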

Developer Experience

Workflow as Code

Summation uses the Orkes UI for quick workflow building and testing, but treats production workflows as code. Workflows are authored in JSON, committed to source control, and deployed programmatically into different environments using scripts. This workflow-as-code approach keeps changes versioned, repeatable, and aligned with their engineering practices.
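
A deployment script of this kind might look like the sketch below, which reads every workflow JSON file from a directory and prepares a single registration request. It is an assumption-laden example rather than Summation's script: the directory layout, the function name, and the sanity check are hypothetical, and the `PUT /api/metadata/workflow` endpoint and `X-Authorization` header reflect the Conductor metadata API as commonly documented and should be verified against the target cluster. The request is built but not sent.

```python
import json
import urllib.request
from pathlib import Path


def build_deploy_request(workflow_dir, base_url, token):
    """Build (but do not send) a request that registers all workflow files."""
    definitions = [
        json.loads(path.read_text())
        for path in sorted(Path(workflow_dir).glob("*.json"))
    ]
    for definition in definitions:
        # Fail early on files that are clearly not workflow definitions.
        if "name" not in definition or "tasks" not in definition:
            raise ValueError(f"incomplete workflow definition: {definition!r}")

    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/api/metadata/workflow",
        data=json.dumps(definitions).encode("utf-8"),
        method="PUT",
        headers={
            "Content-Type": "application/json",
            "X-Authorization": token,
        },
    )
```

Passing a different `base_url` and `token` per environment keeps the same committed JSON as the single source of truth; sending the request is a separate step, for example with `urllib.request.urlopen`.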

Fast Onboarding and Extensibility

Onboarding new developers has been straightforward. Summation built a reusable worker framework where each service includes a base worker class already integrated with Orkes. Engineers extend the base class to add new workflows, which makes it possible for new team members to start building and shipping workflows within about a week.
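
The base-class pattern described above could look roughly like this. The class and method names are hypothetical, and the Orkes-specific plumbing (polling for tasks and reporting results) is deliberately left out; the sketch only shows how shared error handling can live in the base class while each new worker supplies its own logic.

```python
from abc import ABC, abstractmethod


class BaseWorker(ABC):
    """Hypothetical base class: shared plumbing here, task logic in subclasses."""

    task_name = None  # each subclass names the task type it handles

    def run(self, task_input):
        # Shared wrapper: turn results and exceptions into a uniform payload.
        try:
            output = self.execute(task_input)
        except Exception as exc:
            return {"status": "FAILED", "reason": str(exc)}
        return {"status": "COMPLETED", "output": output}

    @abstractmethod
    def execute(self, task_input):
        """Task-specific logic, implemented by each worker."""


class MaterializeTableWorker(BaseWorker):
    task_name = "materialize_table"

    def execute(self, task_input):
        table_id = task_input["tableId"]
        # A real worker would run the SQL rebuild for this table here.
        return {"tableId": table_id, "materialized": True}


result = MaterializeTableWorker().run({"tableId": "t1"})
```

With this split, a new engineer only writes an `execute` method and a task name, which is consistent with the short ramp-up time the team reports.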

Observability and Monitoring

Summation integrated Orkes with Datadog for monitoring and alerting. Workflow events are forwarded into Datadog, and failures trigger Slack alerts so the on-call engineer gets notified immediately. These alerts link directly to the failed execution in Orkes, making it easy to jump from detection to investigation.

Once in the Orkes UI, engineers can quickly identify the failed task within the workflow execution and drill into logs to troubleshoot. This shortens time to triage and helps the team respond quickly when failures impact SLAs.

Selecting an Orchestration Platform

Summation evaluated multiple workflow orchestration options, including vendors like Temporal.

Key evaluation criteria included:

- Native Kafka integration for event-driven workflows

- Support for dynamic workflows and dynamic forking

- Ability to manage retries, timeouts, and failure handling

- Workflow visibility and monitoring

- Tenant isolation at an architectural level

- Flexibility to orchestrate technical workflows at scale

Orkes stood out due to its Kafka integration, workflow flexibility, and ease of use. Pricing and early engagement with the Orkes team also factored into the final decision.

Outcomes

While Summation does not track formal productivity metrics, the impact of orchestration is clear:

- Faster recovery and troubleshooting during failures

- Easier onboarding for new engineers

- Safer handling of concurrency and long-running jobs

- Reliable propagation of data changes across complex dependencies

The platform now supports asynchronous execution as a first-class capability rather than an afterthought.

Looking Ahead

Today, all Orkes workflows at Summation are technical in nature. In the future, the team expects to expand into more business-driven workflows, potentially allowing finance users to define approval flows, validations, and reporting pipelines.

As the platform evolves, orchestration will remain a foundational component of Summation's architecture.