Mistral Integration with Orkes Conductor

To use system AI tasks in Orkes Conductor, you must integrate your Conductor cluster with the necessary AI/LLM providers. This guide explains how to integrate Mistral with Orkes Conductor. Here’s an overview:

- Get the required credentials from Mistral.

- Configure a new Mistral integration in Orkes Conductor.

- Add models to the integration.

- Set access limits on the integration to govern which applications or groups can use its AI models.

Step 1: Get the Mistral credentials

To integrate Mistral with Orkes Conductor, you need an API key from the Mistral console and the Mistral API endpoint.

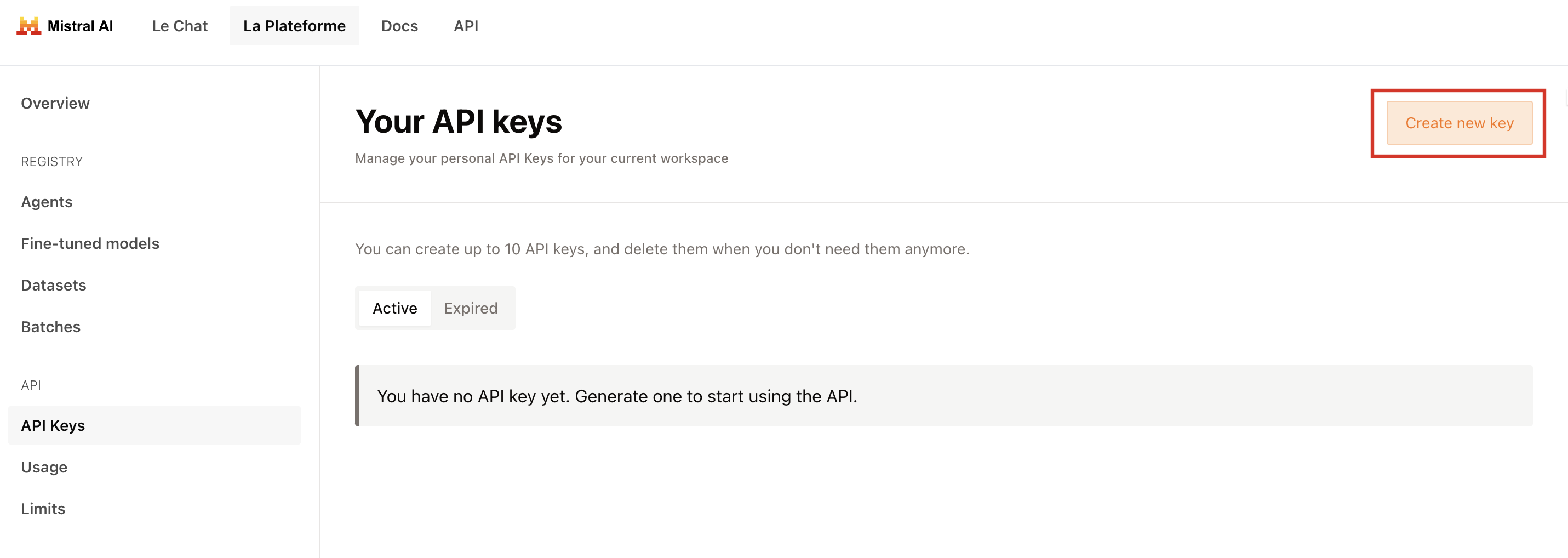

To get the API key:

- Sign in to the Mistral console.

- Go to API Keys > Create new key.

- Enter a Key name.

- (Optional) Set an Expiration for the key.

- Select Create new key.

- Copy and store the generated key.

The default API endpoint for Mistral is https://api.mistral.ai/v1. Use this as the API endpoint when configuring the integration.
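Before configuring the integration, it can help to confirm that the key works against this endpoint. The following is a minimal sketch, assuming the endpoint exposes a model-listing route at `/models` that accepts the key as a bearer token and returns a list of objects with an `id` field. The function names `build_models_request` and `list_model_ids` are illustrative and are not part of Orkes Conductor or any Mistral SDK.

```python
import json
import urllib.request

MISTRAL_ENDPOINT = "https://api.mistral.ai/v1"  # default endpoint from this guide


def build_models_request(api_key: str, endpoint: str = MISTRAL_ENDPOINT) -> urllib.request.Request:
    """Build (but do not send) a GET request for the assumed model-listing route."""
    if not api_key:
        raise ValueError("API key is empty")
    url = endpoint.rstrip("/") + "/models"
    return urllib.request.Request(
        url,
        headers={"Authorization": f"Bearer {api_key}", "Accept": "application/json"},
        method="GET",
    )


def list_model_ids(api_key: str, endpoint: str = MISTRAL_ENDPOINT) -> list[str]:
    """Send the request and return model IDs; an invalid key raises urllib.error.HTTPError."""
    with urllib.request.urlopen(build_models_request(api_key, endpoint), timeout=10) as resp:
        payload = json.load(resp)
    # Assumption: the response body looks like {"data": [{"id": "..."}, ...]}
    return [item["id"] for item in payload.get("data", [])]
```

Calling `list_model_ids(key)` with the key you just created should return a list of model names if the key and endpoint are valid. Read the key from an environment variable or secret store rather than hard-coding it.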

Step 2: Add an integration for Mistral

After obtaining the credentials, add a Mistral integration to your Conductor cluster.

To create a Mistral integration:

- Go to Integrations from the left navigation menu on your Conductor cluster.

- Select + New integration.

- In the AI/LLM section, choose Mistral.

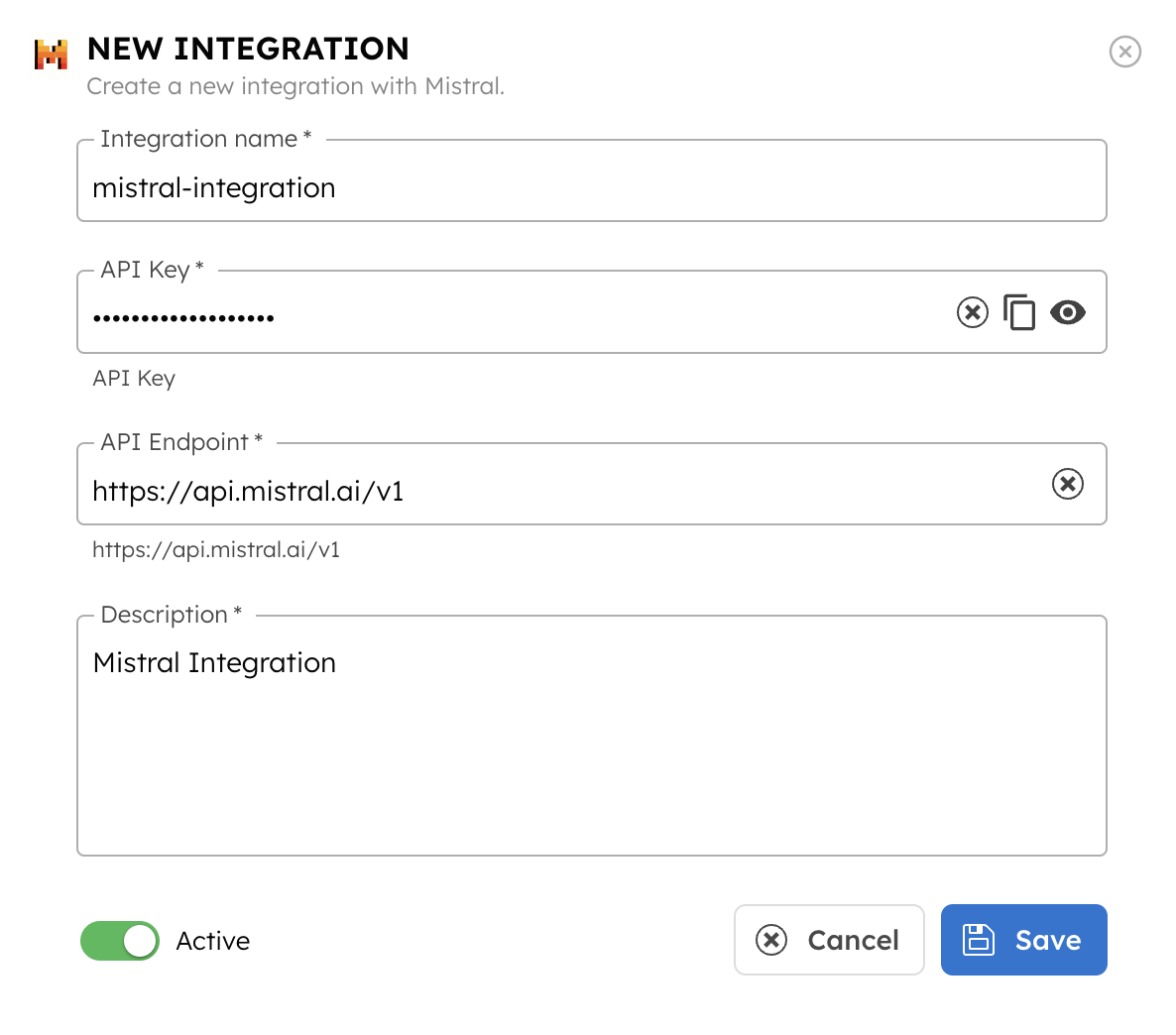

- Select + Add and enter the following parameters:

| Parameter | Description |
|---|---|
| Integration name | A name for the integration. |
| API Key | The API key copied previously from the Mistral console. |
| API Endpoint | The default API endpoint for Mistral, which is https://api.mistral.ai/v1. |
| Description | A description of the integration. |

- (Optional) Toggle the Active button off if you don’t want to activate the integration instantly.

- Select Save.
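If you keep these values in a script or configuration file before entering them, a quick sanity check can catch common mistakes such as a blank name or a pasted key with stray whitespace. This is a minimal sketch only: the `MistralIntegrationParams` class and its `problems` method are hypothetical helpers that mirror the form fields above, not an Orkes Conductor API, and `mistral-prod` is a made-up integration name.

```python
from dataclasses import dataclass
from urllib.parse import urlparse


@dataclass(frozen=True)
class MistralIntegrationParams:
    """Hypothetical container mirroring the integration form fields."""

    integration_name: str
    api_key: str
    api_endpoint: str = "https://api.mistral.ai/v1"  # default from this guide
    description: str = ""

    def problems(self) -> list[str]:
        """Return human-readable problems; an empty list means the values look usable."""
        found = []
        if not self.integration_name.strip():
            found.append("Integration name is empty.")
        if not self.api_key.strip():
            found.append("API Key is empty.")
        elif self.api_key != self.api_key.strip():
            found.append("API Key has leading or trailing whitespace.")
        parsed = urlparse(self.api_endpoint)
        if parsed.scheme != "https" or not parsed.netloc:
            found.append("API Endpoint is not an https URL.")
        return found


# Example values only; "mistral-prod" and the key are placeholders.
params = MistralIntegrationParams("mistral-prod", "example-key")
print(params.problems())
```

The check is purely local: it does not contact Mistral or Conductor, so it only guards against formatting slips, not an invalid key.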

Step 3: Add Mistral models

Once you’ve integrated Mistral, the next step is to add the specific models you want to use. Mistral offers several models, each designed for different use cases. Choose the model that best fits yours.

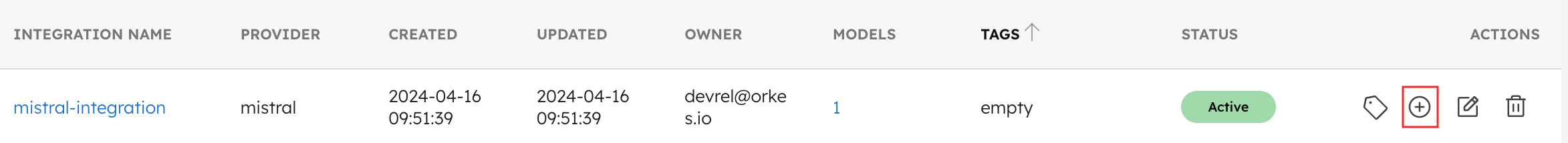

To add a model to the Mistral integration:

- Go to Integrations and select the + button next to the integration you created.

- Select + New model.

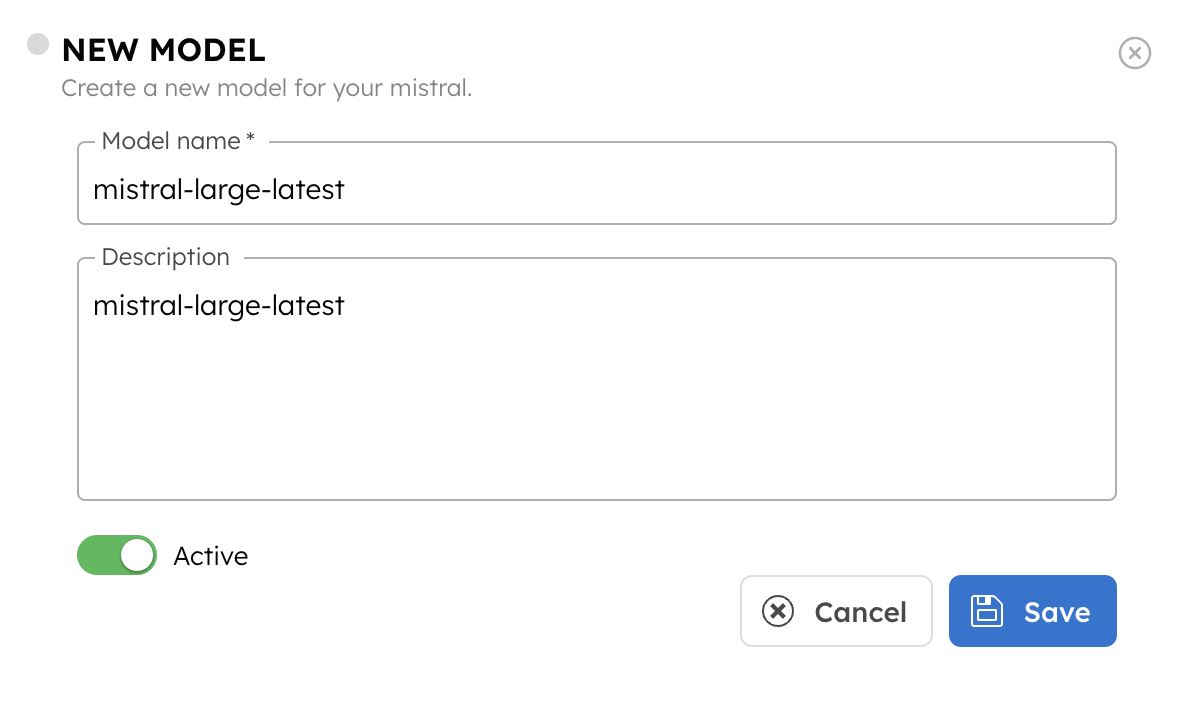

- Enter the Model name. The name must exactly match the Mistral model name. For a complete list, see the Mistral documentation.

- Provide a Description.

- (Optional) Toggle the Active button off if you don’t want to activate the model instantly.

- Select Save.

This saves the model for future use in AI tasks within Orkes Conductor.

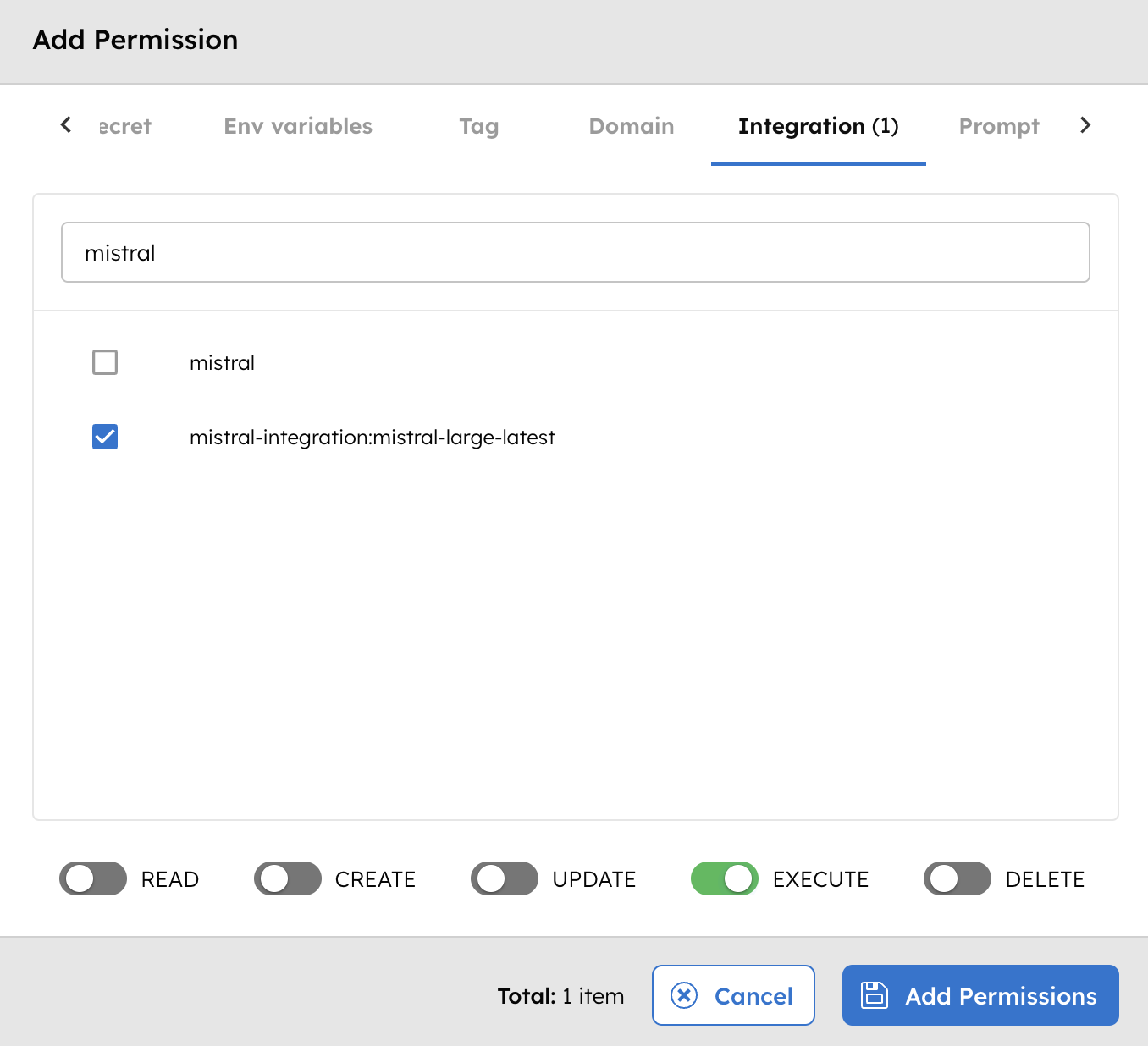
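Because the model name must match the Mistral model name exactly, a small check against a list of known names can catch casing or spacing slips before you save. The sketch below is an illustration under assumptions: `check_model_name` is a hypothetical helper, and the list of IDs is sample data that you would replace with the names from the Mistral documentation or your account.

```python
import difflib


def check_model_name(name: str, available_ids: list[str]) -> str:
    """Return name if it exactly matches an available ID; otherwise raise with suggestions."""
    if name in available_ids:
        return name
    # Suggest near misses, ignoring case and surrounding whitespace.
    suggestions = difflib.get_close_matches(name.strip().lower(), available_ids, n=3, cutoff=0.6)
    hint = f" Did you mean: {', '.join(suggestions)}?" if suggestions else ""
    raise ValueError(f"'{name}' does not exactly match a known model name.{hint}")


# Sample IDs for illustration; replace with the current list from Mistral.
known = ["mistral-small-latest", "mistral-large-latest"]
print(check_model_name("mistral-small-latest", known))
```

An exact comparison is used deliberately: a name that differs only in case or whitespace is rejected, with the closest known names offered as suggestions.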

Step 4: Set access limits for the integration

Once the integration is configured, set access controls to manage which applications or groups can use the models.

To provide access to an application or group:

- Go to Access Control > Applications or Groups from the left navigation menu on your Conductor cluster.

- Create a new group/application or select an existing one.

- In the Permissions section, select + Add Permission.

- In the Integration tab, select the required AI models and toggle the necessary permissions.

- Select Add Permissions.

The group or application can now access the AI model according to the configured permissions.

With the integration in place, you can now create workflows using AI/LLM tasks.
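As a starting point, a workflow that uses the integration might look like the sketch below, which builds a one-task workflow definition as a plain dictionary. The task type `LLM_TEXT_COMPLETE` and the input parameter names `llmProvider`, `model`, and `promptName` are assumptions based on Orkes' LLM Text Complete task and should be checked against the task reference. The function name, workflow name, integration name, and prompt name are all hypothetical.

```python
import json


def build_text_complete_workflow(integration_name: str, model_name: str, prompt_name: str) -> dict:
    """Build a one-task workflow definition as a dict; nothing is registered or executed."""
    return {
        "name": "mistral_text_complete_demo",  # hypothetical workflow name
        "version": 1,
        "schemaVersion": 2,
        "tasks": [
            {
                "name": "mistral_text_complete",
                "taskReferenceName": "mistral_text_complete_ref",
                "type": "LLM_TEXT_COMPLETE",  # assumed task type; verify in the task reference
                "inputParameters": {
                    "llmProvider": integration_name,  # integration name from Step 2
                    "model": model_name,  # model name from Step 3
                    "promptName": prompt_name,  # assumes a saved AI prompt with this name
                },
            }
        ],
    }


# Placeholder values for illustration only.
definition = build_text_complete_workflow("mistral-prod", "mistral-small-latest", "summarize_text")
print(json.dumps(definition, indent=2))
```

The printed JSON can be adapted in the Conductor workflow editor. The application or group running the workflow needs the permissions set in Step 4 for the task to reach the model.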