Using AI Prompts

To effectively use AI tasks in workflows, you must provide clear and structured instructions called prompts that define the model’s behavior and output. Orkes Conductor allows you to create, manage, and securely share AI prompts within your organization.

In Orkes Conductor, AI prompts are reusable and can include variables, allowing you to customize the input to AI models based on runtime data. This approach works like a parameterized API call: variables in the prompt are replaced with actual values during workflow execution. This keeps prompts flexible, reusable, and consistent when you integrate AI models into complex workflows.

In this guide, you will learn how to create, test, and use AI prompts in your workflows.

Create AI prompts

Before you begin, integrate the required AI/LLM provider with Orkes Conductor. Refer to the Integration Guides for detailed steps.

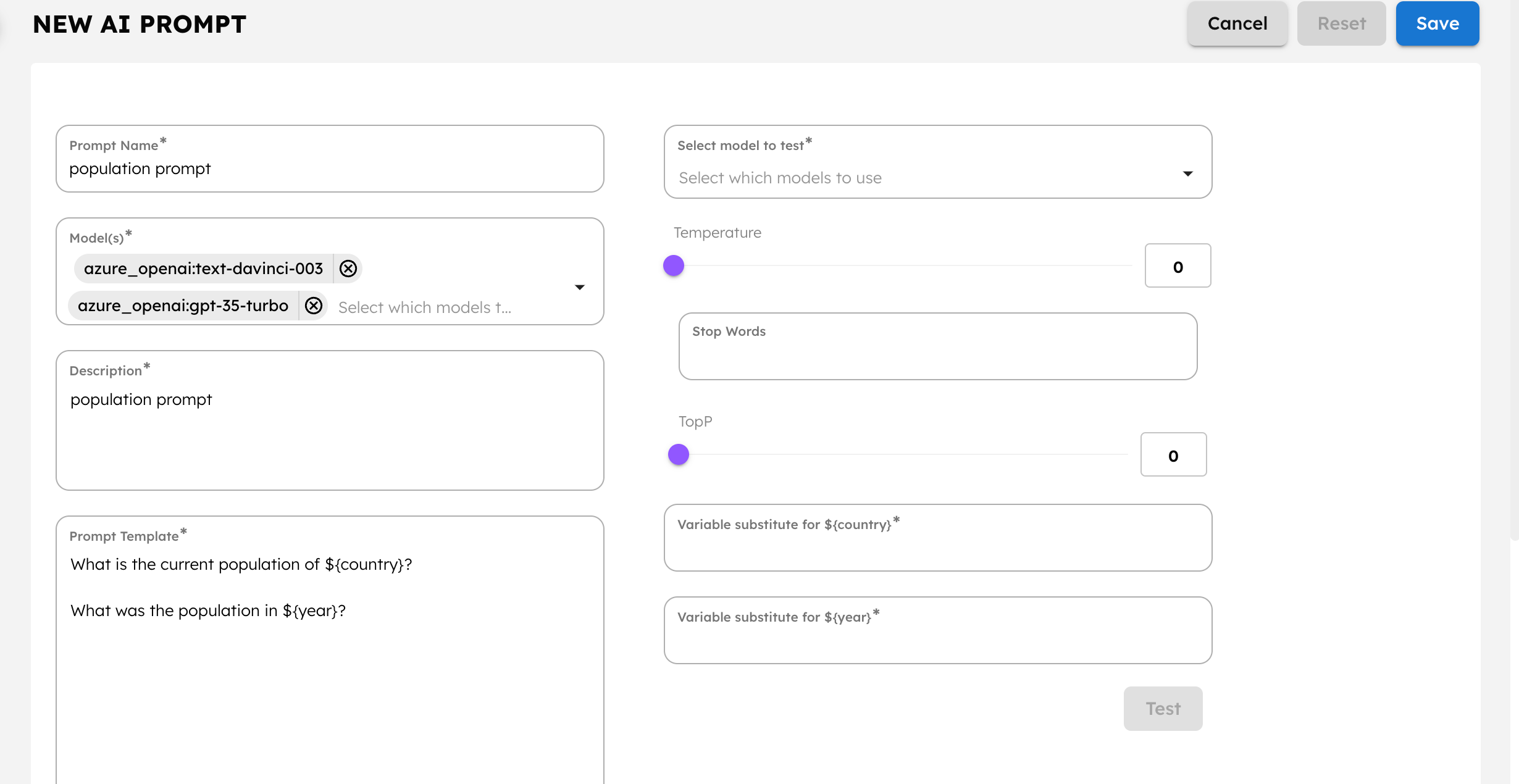

To create an AI prompt:

- Go to Definitions > AI Prompts from the left navigation menu on your Conductor cluster.

- Select + Add AI prompt.

- Enter the following details:

| Parameter | Description |
|---|---|
| Prompt Name | A name for the prompt. |
| Model | Select the AI models to use with this prompt. Note: Only models added here can generate responses based on this prompt in a workflow. |
| Description | A description for the prompt. |
| Prompt Template | The prompt, which can be formulated as context, instructions, or questions. The prompt can also contain variables, written as ${someVariable}, which are replaced with actual values at runtime before the prompt is sent to the AI model. Example: “What is the current population of ${country}? What was the population in ${year}?” In this prompt, ${country} and ${year} are variables that can be set dynamically based on runtime data. |

- Select Save > Confirm Save.

Here’s the JSON definition for an AI prompt:

{
  "name": "population_prompt",
  "integrations": [
    "<YOUR-AI-PROVIDER>:<YOUR-AI-MODEL>"
  ],
  "description": "A prompt to retrieve the population of countries for specific years.",
  "template": "What is the current population of ${country}?\n\nWhat was the population in ${year}?"
}
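To make the variable substitution concrete, here is a minimal Python sketch that renders the template above outside of Conductor. It relies on Python's `string.Template`, which happens to use the same `${name}` placeholder syntax. This only illustrates the idea and is not a description of how Conductor performs the substitution internally. The `render_prompt` helper and the sample values are hypothetical.

```python
from string import Template

# Template text copied from the prompt definition above.
PROMPT_TEMPLATE = (
    "What is the current population of ${country}?\n\n"
    "What was the population in ${year}?"
)


def render_prompt(template: str, variables: dict) -> str:
    """Replace each ${name} placeholder with its value.

    Hypothetical helper for illustration only. Raises KeyError if a
    placeholder has no matching entry in `variables`.
    """
    return Template(template).substitute(variables)


if __name__ == "__main__":
    # Sample runtime values (assumed for this example).
    print(render_prompt(PROMPT_TEMPLATE, {"country": "Japan", "year": 1990}))
```

A missing variable raises an error in this sketch, which is a useful reminder to supply a value for every variable the template declares.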

Manage AI prompt versions

Available in version 5.2.0 and later.

You can create and manage multiple versions of an AI prompt. By default, versioning starts at 1 and increments with each new version.

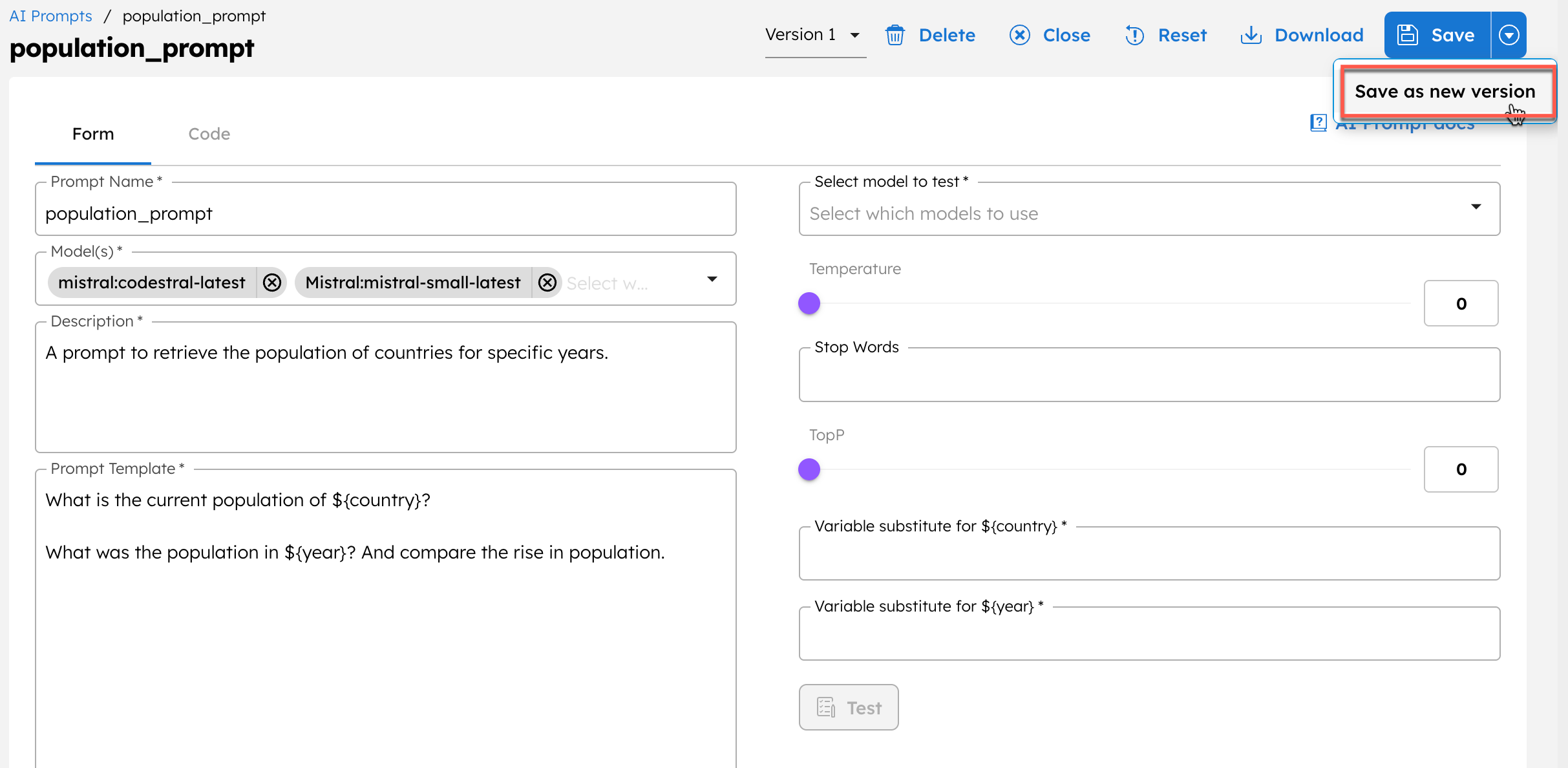

To create a new version:

- After making changes to the AI prompt definition in the Conductor UI, select the ⏷ (down arrow) icon beside Save, then select Save as new version.

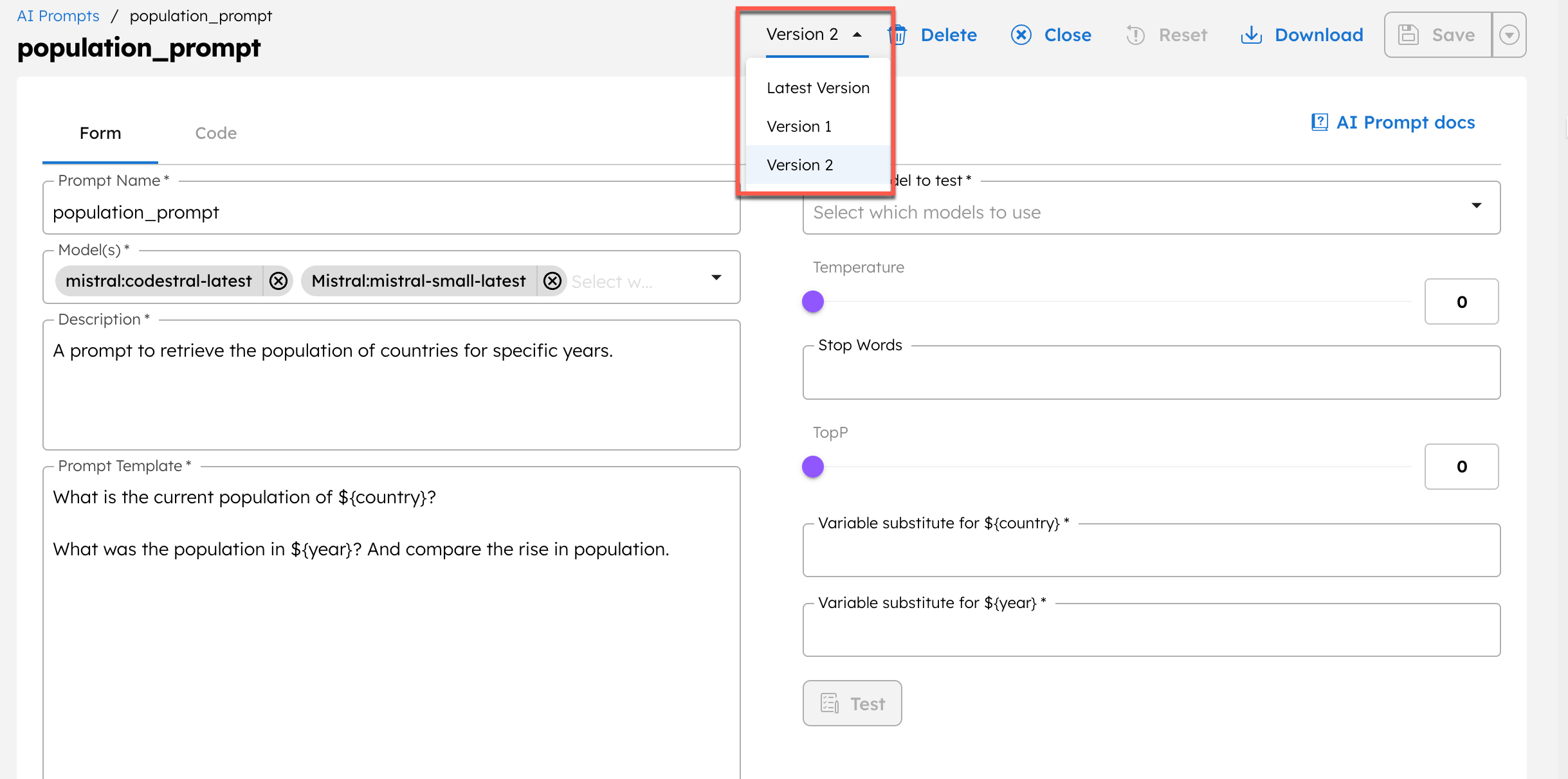

To view available versions:

- In the AI prompt editor, select the Version dropdown and choose the required version.

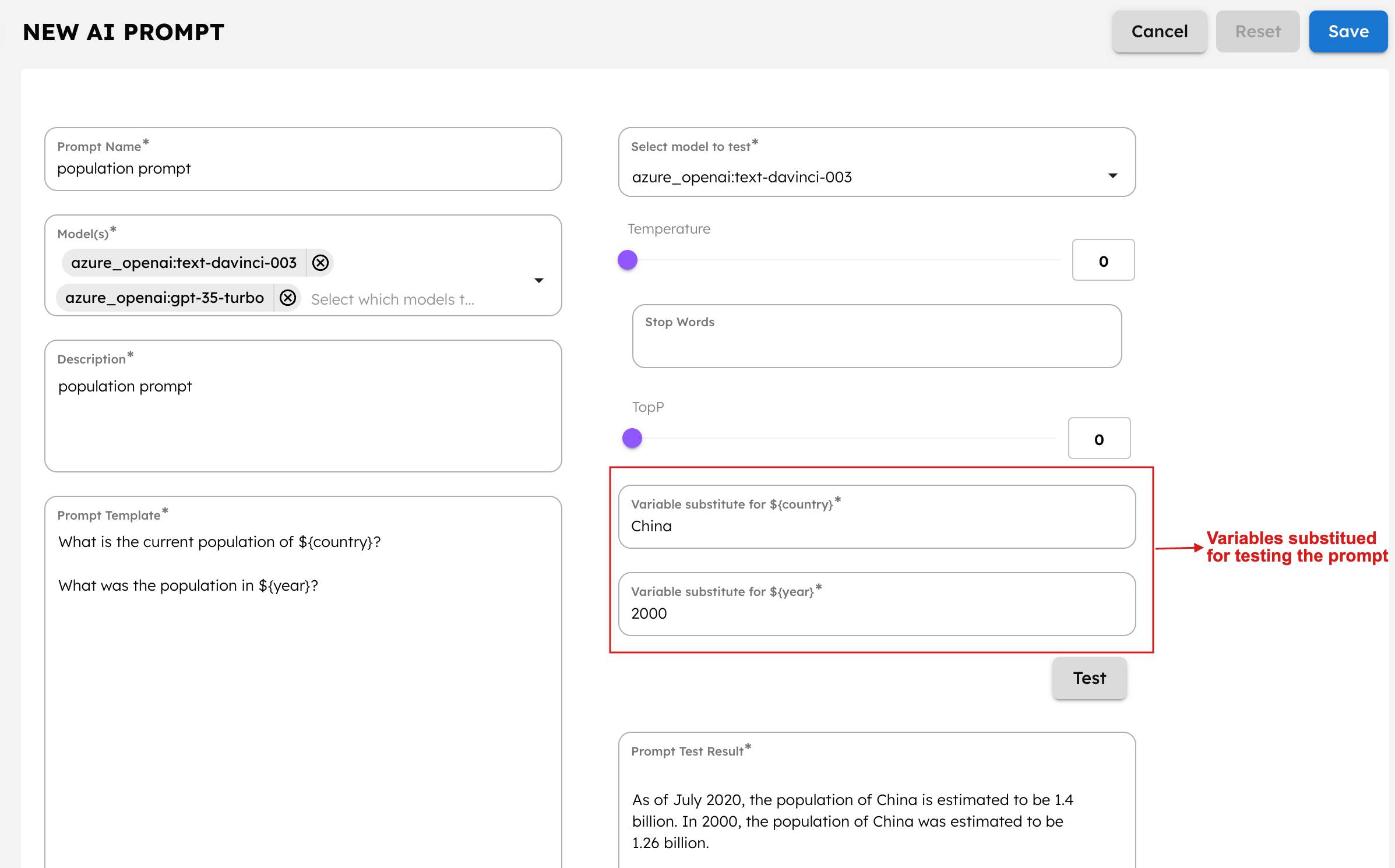

Test AI prompts

After creating an AI prompt, test it to ensure it produces the expected results.

Test parameters

In the AI prompt’s testing interface, define the following parameters:

| Parameter | Description |
|---|---|
| Select model to test | Select an AI model for testing. Only the models added to the prompt configuration are listed here. |
| Temperature | Set the temperature based on your requirements. Temperature controls the randomness of the model’s output: lower values make the output more focused and deterministic, while higher values make it more varied. |
| Stop words | Enter the stop words to be filtered out or given less importance during the text generation process. This ensures the generated text is coherent and contextually relevant. Stop words tend to be common words like “and”, “a”, “the”, etc., that are necessary for sentence structure but do not contribute significant meaning. |
| TopP | Set the topP parameter based on your requirements. TopP also controls the randomness of the model’s output. It defines a probability threshold, and the model samples only from the smallest set of most likely tokens whose cumulative probability reaches or exceeds that threshold. |
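As a rough illustration of what the Temperature setting does, the sketch below applies the common "divide the scores by the temperature, then take a softmax" approach to a few made-up token scores. The function name and the scores are assumptions for this example, and individual model providers may implement temperature differently.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw token scores to probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    logits = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens
    for t in (0.5, 1.0, 2.0):
        probs = softmax_with_temperature(logits, t)
        print(t, [round(p, 3) for p in probs])
```

With a lower temperature, most of the probability concentrates on the highest-scoring token. With a higher temperature, the distribution flattens and less likely tokens are chosen more often.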

Example of using the TopP parameter

Suppose you want to complete the sentence: “She walked into the room and saw a __.” The model considers the following top four words based on their probabilities:

- Cat - 35%

- Dog - 25%

- Book - 15%

- Chair - 10%

If you set the topP parameter to 0.70, the model selects tokens until their cumulative probability reaches or exceeds 70%. Here's how it works:

- Adding "Cat" (35%) brings the cumulative probability to 35%.

- Adding "Dog" (25%) brings the cumulative probability to 60%.

- Adding "Book" (15%) brings the cumulative probability to 75%.

At this point, the cumulative probability is 75%, which exceeds the specified topP value of 70%, so "Chair" is excluded. The model then samples one token from "Cat," "Dog," and "Book" to complete the sentence, because together these tokens account for 75% of the probability.
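The selection in the example above can be sketched in a few lines of Python. This is a simplified illustration of top-p (nucleus) filtering using the four words and probabilities from the example. The function names are hypothetical, and real models operate on their full token vocabulary rather than a four-word dictionary.

```python
import random

# Probabilities taken from the example above.
WORD_PROBS = {"Cat": 0.35, "Dog": 0.25, "Book": 0.15, "Chair": 0.10}


def top_p_candidates(probs, top_p):
    """Return the most likely tokens whose cumulative probability first reaches top_p."""
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
    selected = []
    cumulative = 0.0
    for token, p in ranked:
        selected.append(token)
        cumulative += p
        if cumulative >= top_p:
            break
    return selected


def sample_top_p(probs, top_p, rng=random):
    """Sample one token from the top-p set, weighted by its original probability."""
    candidates = top_p_candidates(probs, top_p)
    weights = [probs[token] for token in candidates]
    return rng.choices(candidates, weights=weights, k=1)[0]


if __name__ == "__main__":
    print(top_p_candidates(WORD_PROBS, 0.70))  # ['Cat', 'Dog', 'Book']
    print(sample_top_p(WORD_PROBS, 0.70))      # one of the three, chosen at random
```

Lowering topP shrinks the candidate set. For instance, with these probabilities a value of 0.30 would leave only "Cat".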

To test an AI prompt:

- Define the variables in the testing interface.

- Select Test.

- Refine the prompt and test it again. When the results align with your expectations, save the prompt.

The AI prompt is now ready for use in workflows. Next, provide access to the prompt for the required groups or applications.

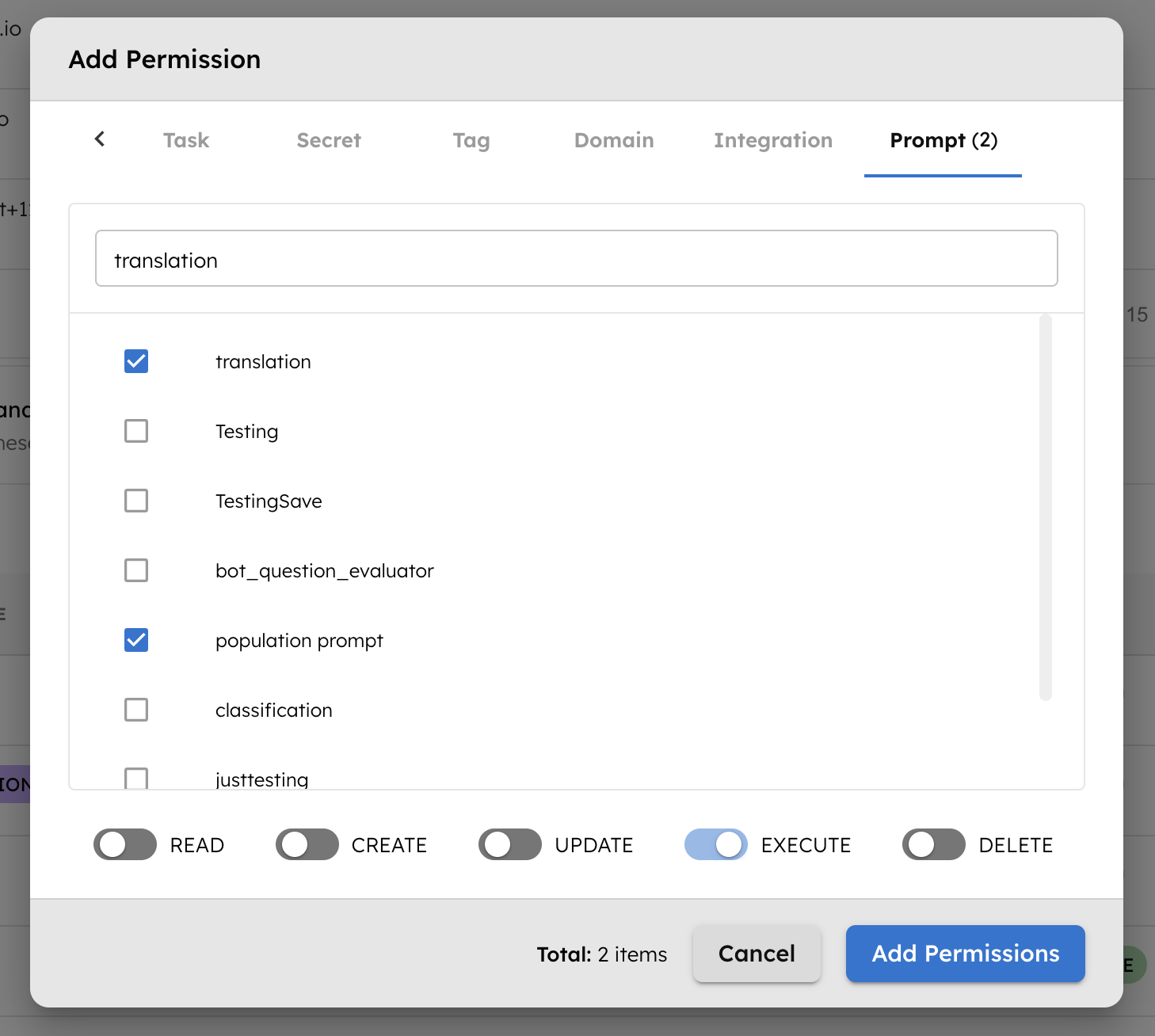

Set access limits to AI prompts

In your Orkes Conductor cluster, you can assign specific permissions to various applications or groups to access different resources, including AI prompts. This lets you control which teams or applications can use a particular prompt in their workflows.

Users or applications must also have access to the associated AI models used in the prompt.

To provide access to an application or group:

- Go to Access Control > Applications or Groups from the left menu on your Orkes Conductor cluster.

- Create a new group/application or select an existing one.

- In the Permissions section, select + Add permission.

- In the Prompt tab, select the required prompt and toggle the necessary permissions.

- Select Add Permissions.

The group or application can now access the prompt according to the configured permissions.

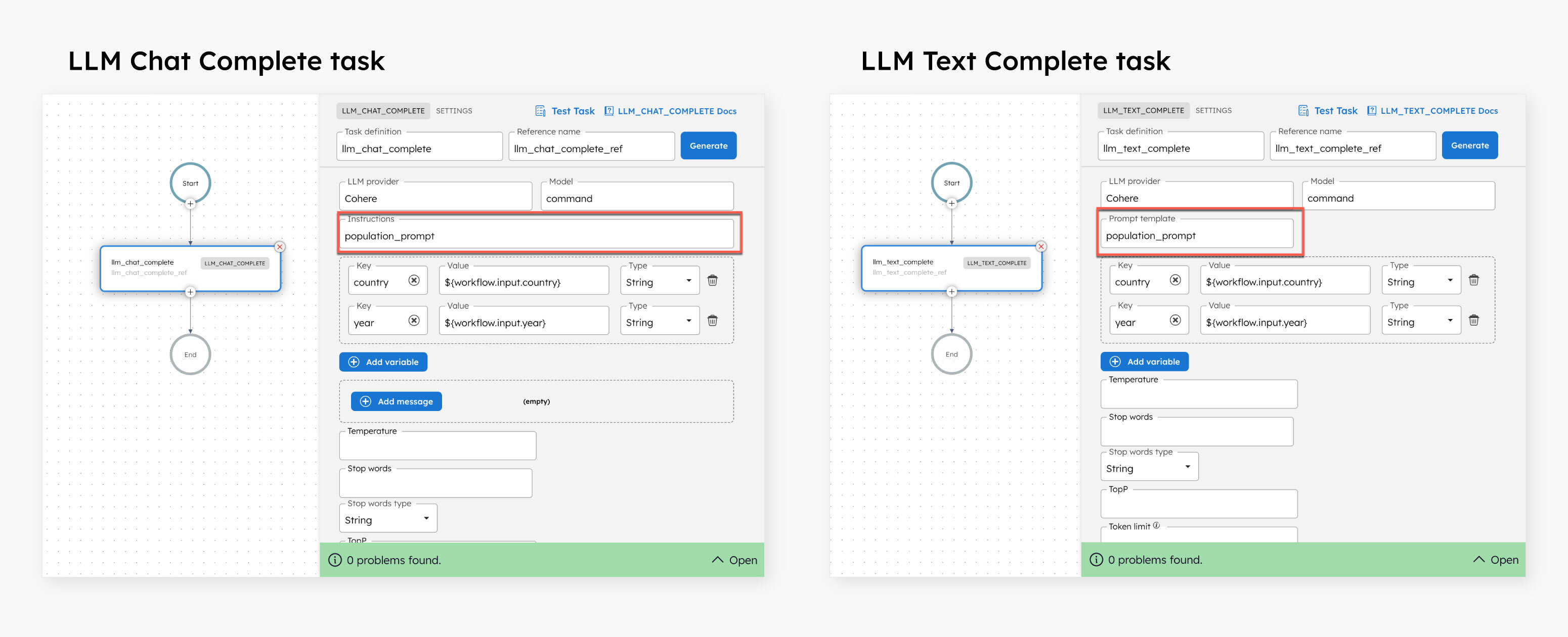

Use AI prompts in workflows

AI prompts can be used in workflows with the following LLM tasks:

- LLM Text Complete

- LLM Chat Complete

To use AI prompts in workflows:

- Go to Definitions > Workflow from the left navigation menu on your Orkes Conductor cluster.

- Select + Define workflow.

- In the visual workflow builder, select Start and add an LLM Text Complete or LLM Chat Complete task.

- Select the required AI provider and model. Ensure they match one of the models associated with the prompt.

- In the Prompt Name field, select the AI prompt you created.

- If the prompt contains variables, map them to corresponding variables in the workflow. For example:

//workflow definition
"promptVariables": {
  "input": "${workflow.input.input}",
  "language": "${workflow.input.language}"
}

- Set the Temperature, Stop words, Stop words Type, TopP, and Token Limit.

- Select Save > Confirm.
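The steps above can also be pictured as a task entry in the workflow's JSON definition. The Python sketch below builds such an entry and checks that every variable in the prompt template has a mapping. Only `promptVariables` and its two mappings come from this guide. The template text, the prompt name `translation_prompt`, the task names, and the remaining field names (`llmProvider`, `model`, `promptName`, the `LLM_TEXT_COMPLETE` type) are assumptions that should be verified against the LLM Text Complete task reference for your cluster.

```python
import json
import re

# Hypothetical template for the prompt referenced by the task.
PROMPT_TEMPLATE = "Translate the following text to ${language}: ${input}"

# Sketch of a task entry; field names other than promptVariables are assumptions.
task = {
    "name": "translate_text",
    "taskReferenceName": "translate_text_ref",
    "type": "LLM_TEXT_COMPLETE",
    "inputParameters": {
        "llmProvider": "<YOUR-AI-PROVIDER>",
        "model": "<YOUR-AI-MODEL>",
        "promptName": "translation_prompt",
        "promptVariables": {
            "input": "${workflow.input.input}",
            "language": "${workflow.input.language}",
        },
    },
}

# Matches ${name} placeholders in a prompt template.
PLACEHOLDER = re.compile(r"\$\{([A-Za-z_][A-Za-z0-9_]*)\}")


def unmapped_variables(template, prompt_variables):
    """Return template variables that have no entry in prompt_variables."""
    names = set(PLACEHOLDER.findall(template))
    return sorted(names - set(prompt_variables))


if __name__ == "__main__":
    missing = unmapped_variables(
        PROMPT_TEMPLATE, task["inputParameters"]["promptVariables"]
    )
    print("Unmapped variables:", missing)
    print(json.dumps(task, indent=2))
```

A check like this can catch a renamed or forgotten variable before the workflow runs, when the mistake is cheaper to fix.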

Examples

Agentic research assistant

Check out the quickstart template on Developer Edition for building an agentic research assistant that uses multiple prompts.

AI tutorials

Explore the AI tutorials section for step-by-step, end-to-end examples that use different AI tasks and integrations.