LLM Chat Complete

The LLM Chat Complete task completes a chat query based on additional instructions. It takes the user's input and generates a response using the supplied instructions and parameters.

The instructions keep the model focused on the intended objective, and the parameters give you control over the model's output behavior.

Before using this task, complete the following prerequisites:

- Integrate the required AI model with Orkes Conductor.

- Create the required AI prompt for the task.

Task parameters

Configure these parameters for the LLM Chat Complete task.

| Parameter | Description | Required/Optional |
|---|---|---|
| inputParameters.llmProvider | The integration name of the LLM provider integrated with your Conductor cluster. Note: If you haven’t configured your AI/LLM provider on your Orkes Conductor cluster, go to the Integrations tab and configure your required provider. | Required. |
| inputParameters.model | The language model to use from those available within the selected LLM provider. For example, if your LLM provider is Azure OpenAI and you’ve configured text-davinci-003 as the language model, you can select it here. | Required. |
| inputParameters.instructions | The ground rules or instructions for the chat, so that the model responds only to specific queries and does not deviate from the objective. Select the instructions saved as an AI prompt in Orkes Conductor and add them here. Note: If you haven’t created an AI prompt for your language model, refer to the documentation on creating AI Prompts in Orkes Conductor. | Optional. |
| inputParameters.promptVariables | For prompts that involve variables, provide the input to these variables within this field. Supports string, number, boolean, null, and object/array values. | Optional. |
| inputParameters.messages | The list of messages, each with a role and a message, used to complete the chat query. Supported fields: role and message. | Optional. |
| inputParameters.messages.role | The role for the chat message. Available options are user, assistant, system, and human. | Optional. |
| inputParameters.messages.message | The corresponding input message to be provided. It can also be passed as a dynamic input. | Optional. |
| inputParameters.temperature | A parameter to control the randomness of the model’s output. Higher temperatures, such as 1.0, make the output more random and creative. A lower value makes the output more deterministic and focused. Tip: If you're using a text blurb as input and want to categorize it based on its content type, opt for a lower temperature setting. Conversely, if you're providing text inputs and intend to generate content like emails or blogs, use a higher temperature setting. | Optional. |
| inputParameters.stopWords | List of words to be omitted during text generation. Supports string and object/array. In LLMs, stop words may be filtered out or given less importance during text generation so that the generated text is coherent and contextually relevant. | Optional. |
| inputParameters.topP | Another parameter to control the randomness of the model’s output. It defines a probability threshold: the model samples only from the smallest set of most probable tokens whose cumulative probability reaches this threshold. Example: When completing the sentence “She walked into the room and saw a __.”, the model considers only the highest-probability candidate words that fall within the threshold. | Optional. |
| inputParameters.maxTokens | The maximum number of tokens to be generated by the LLM and returned as part of the result. A token is approximately four characters. | Optional. |
| inputParameters.jsonOutput | Determines whether the LLM’s response is parsed as JSON. When set to ‘true’, the model’s response is processed as structured JSON data. | Optional. |
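
To make the topP threshold more concrete, here is a minimal Python sketch of the selection step it describes. The function name `top_p_filter`, the candidate words, and their probabilities are all hypothetical and chosen for illustration only; this is not how any particular provider implements sampling internally.

```python
def top_p_filter(token_probs, top_p):
    """Keep the smallest set of most probable tokens whose cumulative
    probability reaches top_p (illustrative helper, not a provider API)."""
    if not 0.0 < top_p <= 1.0:
        raise ValueError("top_p must be in the range (0, 1]")

    # Rank candidates from most to least probable.
    ranked = sorted(token_probs.items(), key=lambda item: item[1], reverse=True)

    kept = []
    cumulative = 0.0
    for token, prob in ranked:
        kept.append(token)
        cumulative += prob
        if cumulative >= top_p:
            break  # Threshold reached; remaining tokens are excluded.
    return kept


# Hypothetical next-word probabilities for
# "She walked into the room and saw a __."
candidates = {"table": 0.5, "cat": 0.25, "window": 0.15, "dragon": 0.1}

print(top_p_filter(candidates, 0.8))  # keeps the three most probable words
print(top_p_filter(candidates, 0.5))  # keeps only the most probable word
```

A lower topP narrows the pool of candidate words, while a value of 1.0 leaves every candidate available for sampling.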

The following are generic configuration parameters that can be applied to the task and are not specific to the LLM Chat Complete task.

Caching parameters

You can cache the task outputs using the following parameters. Refer to Caching Task Outputs for a full guide.

| Parameter | Description | Required/Optional |
|---|---|---|
| cacheConfig.ttlInSecond | The time to live in seconds, which is the duration for the output to be cached. | Required if using cacheConfig. |
| cacheConfig.key | The cache key is a unique identifier for the cached output and must be constructed exclusively from the task’s input parameters. It can be a string concatenation that contains the task’s input keys, such as ${uri}-${method} or re_${uri}_${method}. | Required if using cacheConfig. |
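
The following Python sketch illustrates how a cache key template built from input keys resolves to a concrete string. It is an approximation for illustration only: `resolve_cache_key` is a hypothetical helper, the input values are made up, and Conductor performs its own substitution when the task runs.

```python
from string import Template


def resolve_cache_key(key_template, input_parameters):
    """Fill ${name} placeholders using only the task's input parameters.

    Raises KeyError if the template references a key that is not
    present in the input parameters.
    """
    return Template(key_template).substitute(input_parameters)


# Hypothetical input parameters matching the key examples in the table.
inputs = {"uri": "https://example.com/api", "method": "GET"}

print(resolve_cache_key("${uri}-${method}", inputs))
print(resolve_cache_key("re_${uri}_${method}", inputs))
```

Because the key is derived only from input parameters, two executions with identical inputs resolve to the same key and can share a cached output until the TTL expires.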

Other generic parameters

Here are other parameters for configuring the task behavior.

| Parameter | Description | Required/Optional |
|---|---|---|
| optional | Whether the task is optional. If set to true, any task failure is ignored, and the workflow continues with the task status updated to COMPLETED_WITH_ERRORS. However, the task must reach a terminal state. If the task remains incomplete, the workflow waits until it reaches a terminal state before proceeding. | Optional. |

Task configuration

This is the task configuration for an LLM Chat Complete task.

```json
{
  "name": "llm_chat_complete",
  "taskReferenceName": "llm_chat_complete_ref",
  "inputParameters": {
    "llmProvider": "openAI",
    "model": "chatgpt-4o-latest",
    "instructions": "<AI-PROMPT>",
    "messages": [
      {
        "role": "user",
        "message": "${workflow.input.someParameter}"
      }
    ],
    "temperature": 0.2,
    "topP": 0.9,
    "jsonOutput": false,
    "promptVariables": {
      "prDescription": "${workflow.input.someParameter}"
    }
  },
  "type": "LLM_CHAT_COMPLETE"
}
```
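
If you generate workflow definitions from code, the same configuration can be assembled as a plain dictionary and serialized to JSON. The sketch below uses only the Python standard library; `build_chat_complete_task` is a hypothetical helper, not part of any Conductor SDK, and its only check is that the two required parameters from the table above are present.

```python
import json

# Parameters marked as required in the task parameters table.
REQUIRED_INPUTS = ("llmProvider", "model")


def build_chat_complete_task(reference_name, input_parameters):
    """Return an LLM Chat Complete task definition as a dict.

    Hypothetical helper: raises ValueError if a required input is missing.
    """
    missing = [name for name in REQUIRED_INPUTS if not input_parameters.get(name)]
    if missing:
        raise ValueError("Missing required input parameters: " + ", ".join(missing))
    return {
        "name": "llm_chat_complete",
        "taskReferenceName": reference_name,
        "inputParameters": dict(input_parameters),
        "type": "LLM_CHAT_COMPLETE",
    }


task = build_chat_complete_task(
    "llm_chat_complete_ref",
    {
        "llmProvider": "openAI",
        "model": "chatgpt-4o-latest",
        "instructions": "<AI-PROMPT>",
        "messages": [
            {"role": "user", "message": "${workflow.input.someParameter}"}
        ],
        "temperature": 0.2,
        "topP": 0.9,
        "jsonOutput": False,
    },
)

print(json.dumps(task, indent=2))
```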

Task output

The LLM Chat Complete task will return the following parameters.

| Parameter | Description |
|---|---|
| result | The completed chat by the LLM. |
| finishReason | Indicates why the text generation stopped. Common values include STOP when the model completes naturally. |
| tokenUsed | Total number of tokens consumed for the request, including both prompt and completion tokens. |
| promptTokens | Number of tokens used to process the prompt. |
| completionTokens | Number of tokens generated by the model in the output text returned by the task. |

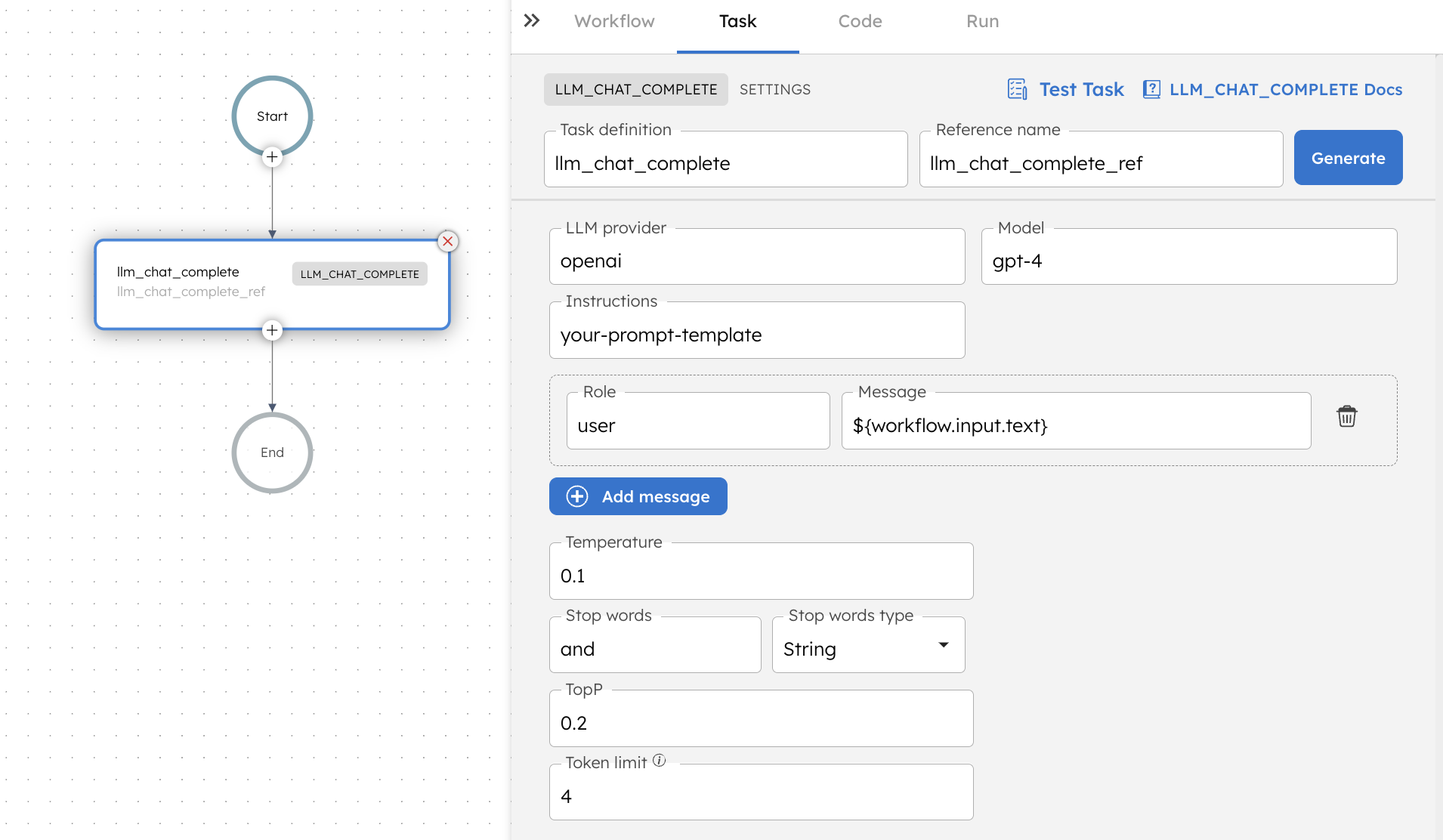

Adding an LLM Chat Complete task in UI

To add an LLM Chat Complete task:

- In your workflow, select the (+) icon and add an LLM Chat Complete task.

- In Provider and Model, select the LLM provider and model.

- In Prompt and Variables, select the Prompt Name.

- (Optional) Select +Add variable to provide the variable path if your prompt template includes variables.

- (Optional) In Structured Message, select +Add message and choose the appropriate role and messages to complete the chat query.

- (Optional) In Fine Tuning, set the parameters Temperature, TopP, Token Limit, Stop words, and Stop word type.

- (Optional) In Output Format, toggle on Enable JSON Output to format the LLM’s response as structured JSON.

Examples

Here are some examples of using the LLM Chat Complete task.

Using an LLM Chat Complete task in a workflow

See an example of building a pull request summary workflow.