Ollama Integration with Orkes Conductor

- Available in v5.2.0 and later

You can use this integration in the following scenarios:

- Conductor is running locally, and Ollama is running locally.

- Conductor is running in the cloud, and Ollama is hosted on a server accessible to the Conductor cluster.

To use system AI tasks in Orkes Conductor, you must integrate your Conductor cluster with the necessary AI/LLM providers. This guide explains how to integrate Ollama with Orkes Conductor. Here’s an overview:

- Set up the Ollama app locally.

- Configure a new Ollama integration in Orkes Conductor.

- Add models to the integration.

- Set access limits to the AI model to govern which applications or groups can use it.

Step 1: Set up the Ollama app locally

To integrate Ollama with Orkes Conductor, first download and run Ollama locally on your device.

To run Ollama locally:

- Download and install the Ollama app.

- Open the app on your device.

- Choose the model you want to run from the list of supported Ollama models. You should have at least 8 GB of RAM available to run the 7B models, 16 GB to run the 13B models, and 32 GB to run the 33B models.

- Pull the model locally with the following command.

`ollama pull <MODEL-NAME>`

Example:

`ollama pull mistral`

This downloads the model to your device.

- Once downloaded, run the model using the command:

`ollama run <MODEL-NAME>`

- Enter prompts in the terminal to verify that the model runs locally.

The default local API endpoint for Ollama is http://localhost:11434. Open this URL in a browser to confirm that it displays “Ollama is running”.
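
The browser check can also be scripted. The following is a minimal sketch using only the Python standard library; the function names `is_ollama_banner` and `check_ollama` are illustrative and not part of Ollama or Conductor.

```python
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # default local endpoint mentioned above


def is_ollama_banner(body: str) -> bool:
    """Return True if the response body contains the Ollama status banner."""
    return "Ollama is running" in body


def check_ollama(base_url: str = OLLAMA_URL, timeout: float = 5.0) -> bool:
    """Fetch the root URL and report whether Ollama answered with its banner."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as resp:
            body = resp.read().decode("utf-8", errors="replace")
    except OSError:
        # Covers refused connections, DNS failures, HTTP errors, and timeouts.
        return False
    return is_ollama_banner(body)
```

Calling `check_ollama()` returns `True` when the endpoint responds with the banner and `False` when nothing is listening on that address.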

If your Conductor cluster is running in the cloud, you must host Ollama on a server accessible to that cloud environment. The server must allow network access from the Conductor cluster to the Ollama API endpoint.
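
To confirm that a remotely hosted model answers over the network, you can send a prompt to Ollama's `/api/generate` endpoint from a machine in the same network as the Conductor cluster. This is a sketch under assumptions: the helper names are illustrative, and the base URL and model name are placeholders for your own values.

```python
import json
import urllib.request


def build_generate_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(base_url: str, model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt and return the generated text from the JSON reply."""
    req = build_generate_request(base_url, model, prompt)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read())["response"]
```

For example, `ask("http://localhost:11434", "mistral", "Say hello")` returns the model's reply as a string if the server is reachable and the model has been pulled.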

Step 2: Add an integration for Ollama

After running Ollama locally, add an Ollama integration to your Conductor cluster.

To create an Ollama integration:

- Go to Integrations from the left navigation menu on your Conductor cluster.

- Select + New integration.

- In the AI/LLM section, choose Ollama.

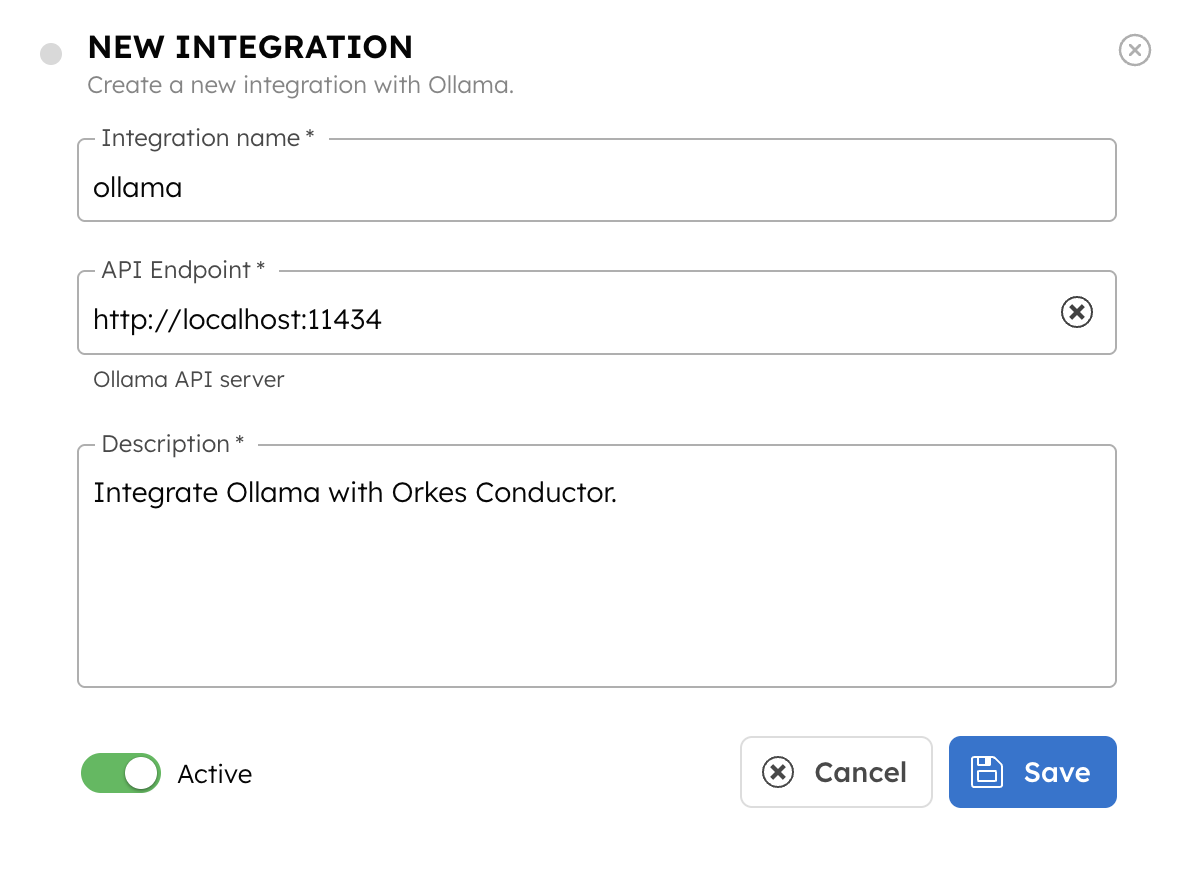

- Select + Add and enter the following parameters:

| Parameters | Description | Required / Optional |
|---|---|---|
| Integration name | A name for the integration. | Required. |
| API Endpoint | The API server where Ollama is running, typically http://localhost:11434. | Required. |
| Auth Header Name (available since v5.2.6) | The name of the authentication header to include in requests. Required only when Ollama is exposed behind a secured endpoint or proxy. | Optional. |
| Auth Header (available since v5.2.6) | The authentication value associated with the header. | Optional. |
| Description | A description of the integration. | Required. |

- (Optional) Toggle the Active button off if you don’t want to activate the integration instantly.

- Select Save.
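
If Ollama sits behind a secured endpoint or proxy, it can help to test the header pair before saving it in the integration. The sketch below shows what a request carrying such a header might look like. It is illustrative only: the header name `X-API-Key`, the host `ollama.example.com`, and the helper names are hypothetical, and the source does not describe how Conductor itself sends the header.

```python
import urllib.request
from typing import Dict, Optional


def build_auth_headers(name: Optional[str], value: Optional[str]) -> Dict[str, str]:
    """Return the optional auth header, or no headers if either field is empty."""
    if name and value:
        return {name: value}
    return {}


def build_tags_request(
    api_endpoint: str,
    name: Optional[str] = None,
    value: Optional[str] = None,
) -> urllib.request.Request:
    """Build a GET request for Ollama's /api/tags, with the auth header if given."""
    url = api_endpoint.rstrip("/") + "/api/tags"
    return urllib.request.Request(url, headers=build_auth_headers(name, value))


def probe(req: urllib.request.Request, timeout: float = 5.0) -> int:
    """Send the request and return the HTTP status code.

    urllib raises HTTPError for 4xx/5xx responses, such as a 401 from the proxy.
    """
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.status
```

For example, `probe(build_tags_request("https://ollama.example.com", "X-API-Key", "<your-secret>"))` returns `200` only if the proxy accepts the header and forwards the request to Ollama.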

Step 3: Add Ollama models

Once you’ve integrated Ollama, the next step is to configure specific models. Add the model that you have set up on your local device.

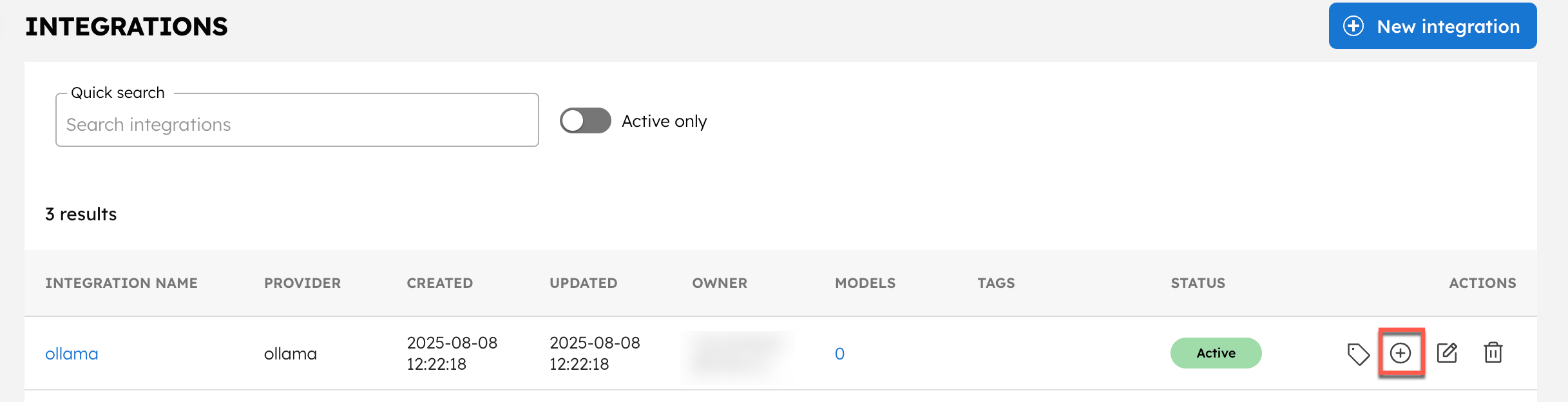

To add a model to the Ollama integration:

- Go to Integrations and select the + button next to the integration you created.

- Select + New model.

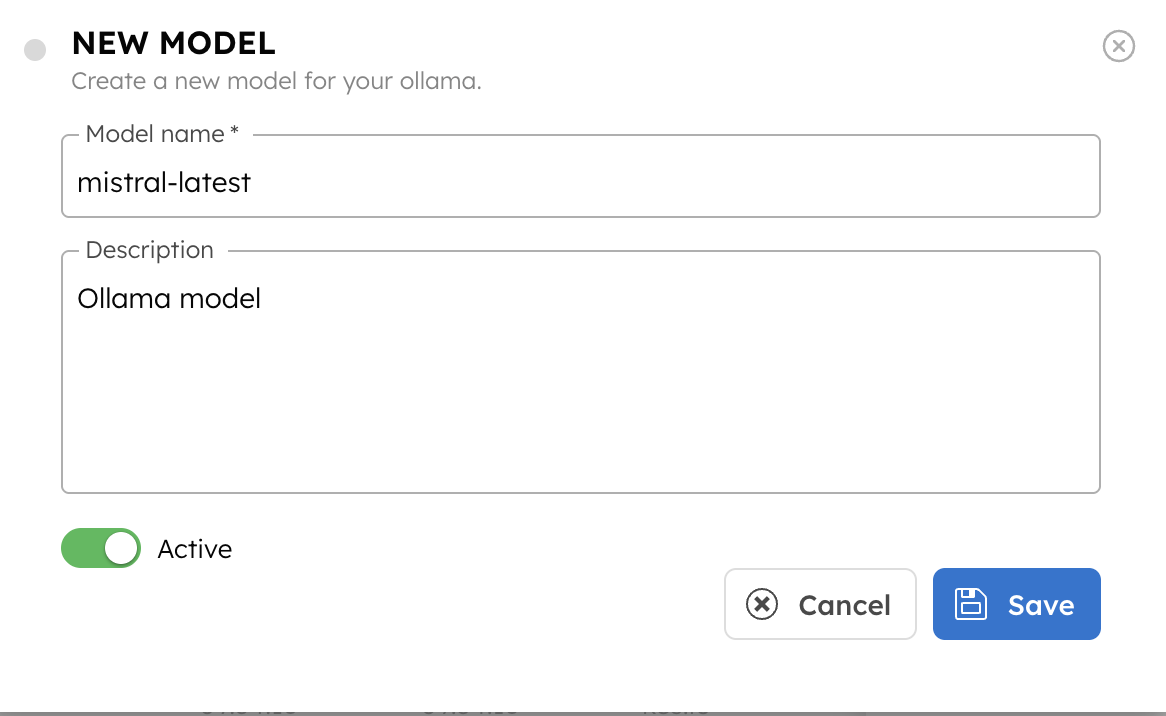

- Enter the Model name. The model name must exactly match the identifier of the model in your Ollama installation, including any version or tag (for example, mistral or mistral:latest).

- Provide a Description.

- (Optional) Toggle the Active button off if you don’t want to activate the model instantly.

- Select Save.

This saves the model for future use in AI tasks within Orkes Conductor.
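
Because the model name must match what Ollama has installed, it can be useful to list the installed identifiers first. The sketch below reads them from Ollama's `/api/tags` endpoint. The helper names are illustrative, and the `:latest` normalization reflects Ollama's default tag behavior; whether Conductor applies the same default is an assumption you should verify.

```python
import json
import urllib.request
from typing import List


def fetch_tags(base_url: str = "http://localhost:11434", timeout: float = 5.0) -> dict:
    """Fetch the list of locally installed models from Ollama's /api/tags."""
    with urllib.request.urlopen(base_url.rstrip("/") + "/api/tags", timeout=timeout) as resp:
        return json.load(resp)


def local_model_names(tags_payload: dict) -> List[str]:
    """Extract full model identifiers, such as 'mistral:latest', from the payload."""
    return [m["name"] for m in tags_payload.get("models", [])]


def normalize(name: str) -> str:
    """Append ':latest' when no tag is given (assumed to mirror Ollama's default)."""
    return name if ":" in name else name + ":latest"


def is_installed(requested: str, names: List[str]) -> bool:
    """Check whether the requested model identifier is installed locally."""
    return normalize(requested) in names
```

For example, `is_installed("mistral", local_model_names(fetch_tags()))` reports whether the model pulled in Step 1 is available under that name.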

Step 4: Set access limits to the integration

Once the integration is configured, set access controls to manage which applications or groups can use the models.

To provide access to an application or group:

- Go to Access Control > Applications or Groups from the left navigation menu on your Conductor cluster.

- Create a new group/application or select an existing one.

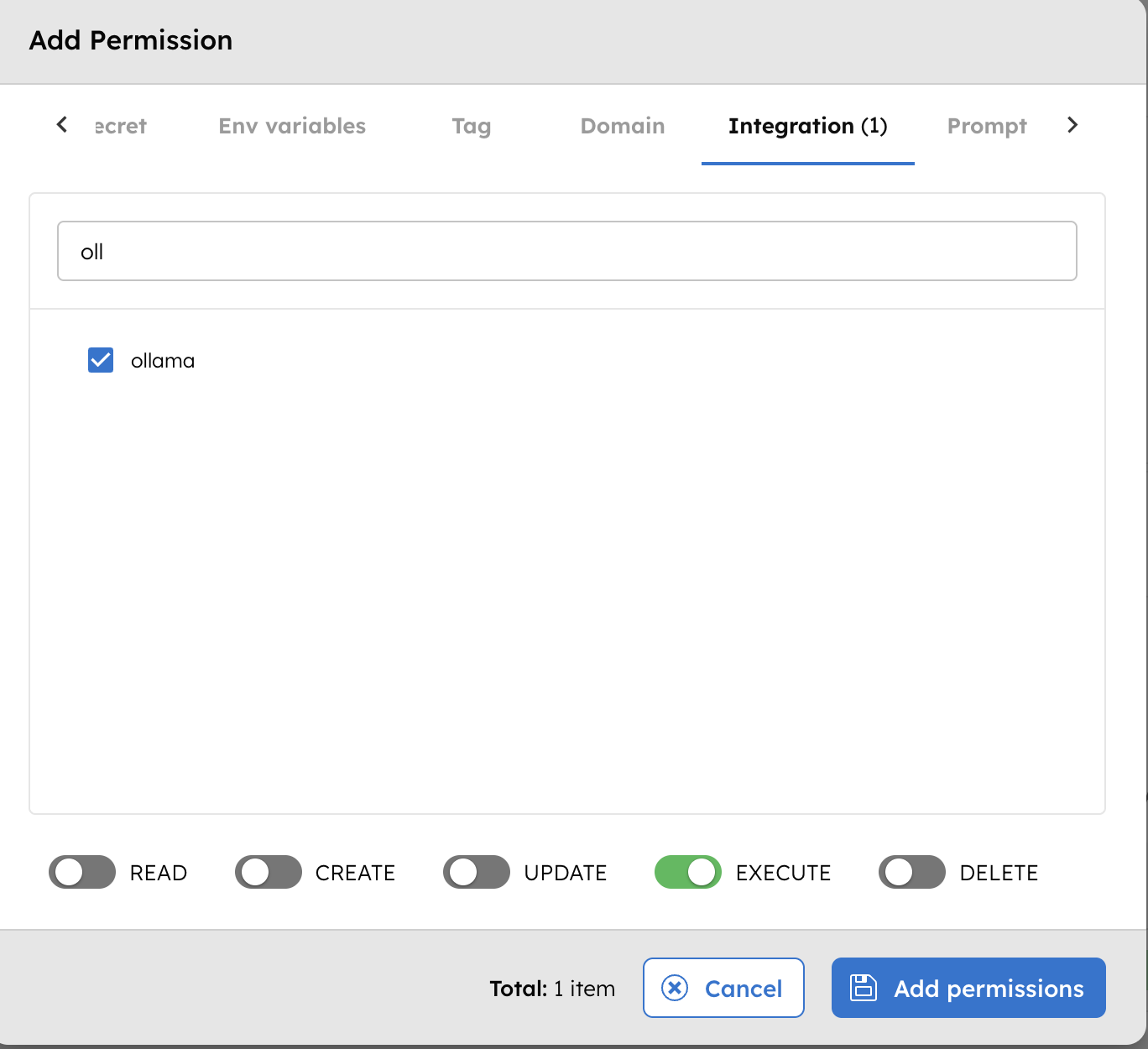

- In the Permissions section, select + Add Permission.

- In the Integration tab, select the required AI models and toggle the necessary permissions.

- Select Add Permissions.

The group or application can now access the AI model according to the configured permissions.

With the integration in place, you can now create workflows using AI/LLM tasks.