Apache Kafka Integration with Orkes Conductor

To use the Event task or Event Handler, or to enable Change Data Capture (CDC), in Orkes Conductor, you must integrate your Conductor cluster with the necessary message brokers. This guide explains how to integrate self-managed Apache Kafka, Amazon MSK, or Confluent Kafka clusters with Orkes Conductor so that you can publish messages to and receive messages from topics. Here’s an overview:

- Get the required credentials from Kafka.

- Configure a new Apache Kafka integration in Orkes Conductor.

- Set access limits to the message broker to govern which applications or groups can use it.

Step 1: Get the Kafka credentials

To integrate Kafka with Orkes Conductor, retrieve the following credentials from the Kafka cluster:

- API keys

- Bootstrap server

- Schema registry URL (only if integrating with a schema registry for the AVRO protocol)

- Consumer Group ID

The configuration steps vary depending on the type of Kafka cluster to be integrated.

- Get Apache Kafka credentials

- Get Confluent Kafka credentials

- Get Amazon MSK credentials

Set up Apache Kafka locally and retrieve the following credentials:

- Bootstrap server

- API key and secret

To integrate Confluent Kafka with Orkes Conductor, retrieve the following credentials from the Confluent Cloud portal:

- API keys

- Bootstrap server

- Schema registry URL (only if integrating with a schema registry for the AVRO protocol)

- Consumer Group ID

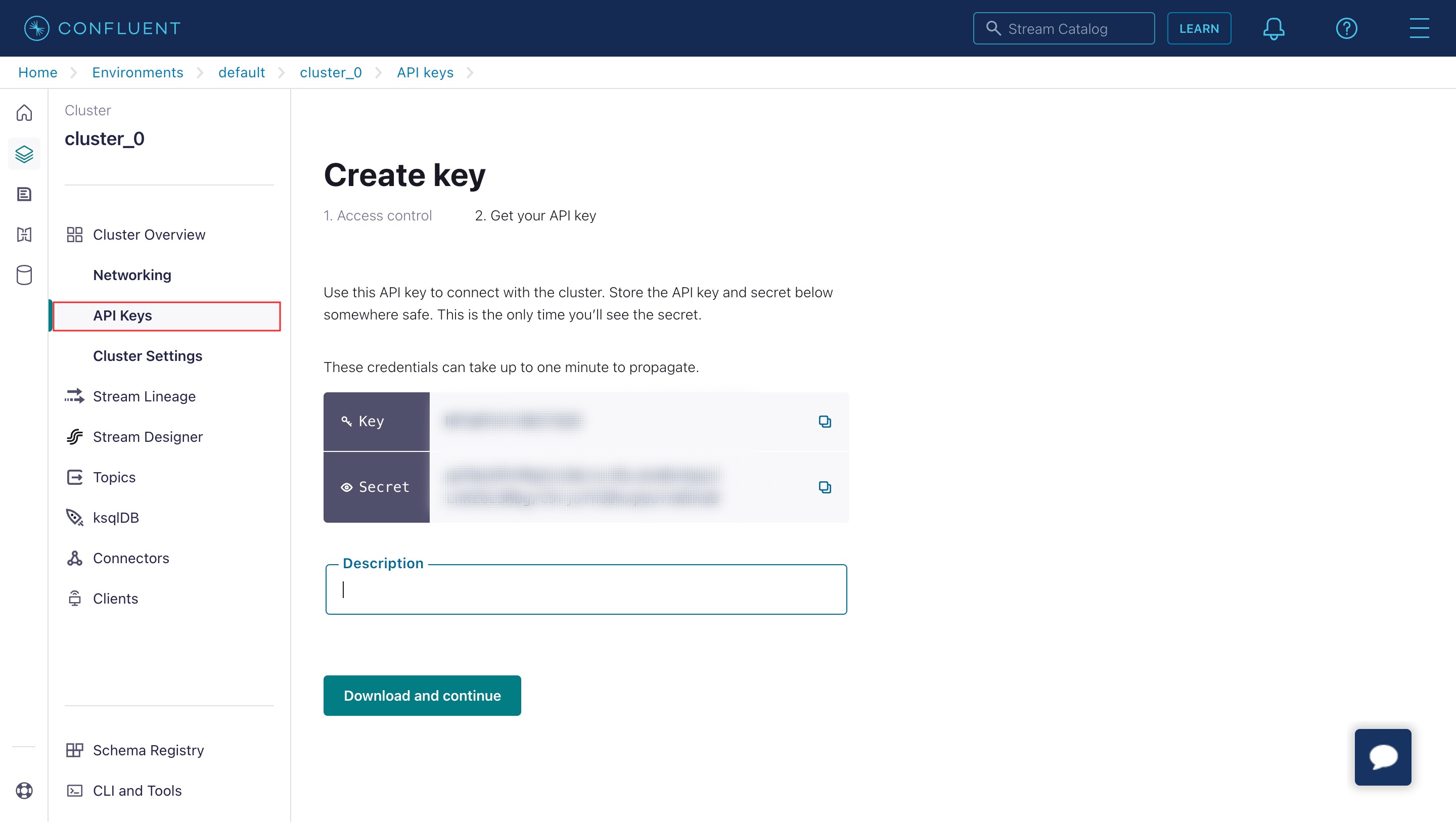

To retrieve the API keys:

- Sign in to the Confluent Cloud portal.

- Select the Confluent cluster to integrate with Orkes Conductor.

- Go to Cluster Overview > API Keys from the left navigation menu.

- Select Create Key > + Add key.

- Choose either Global access or Granular access.

- Copy and store the Key and Secret.

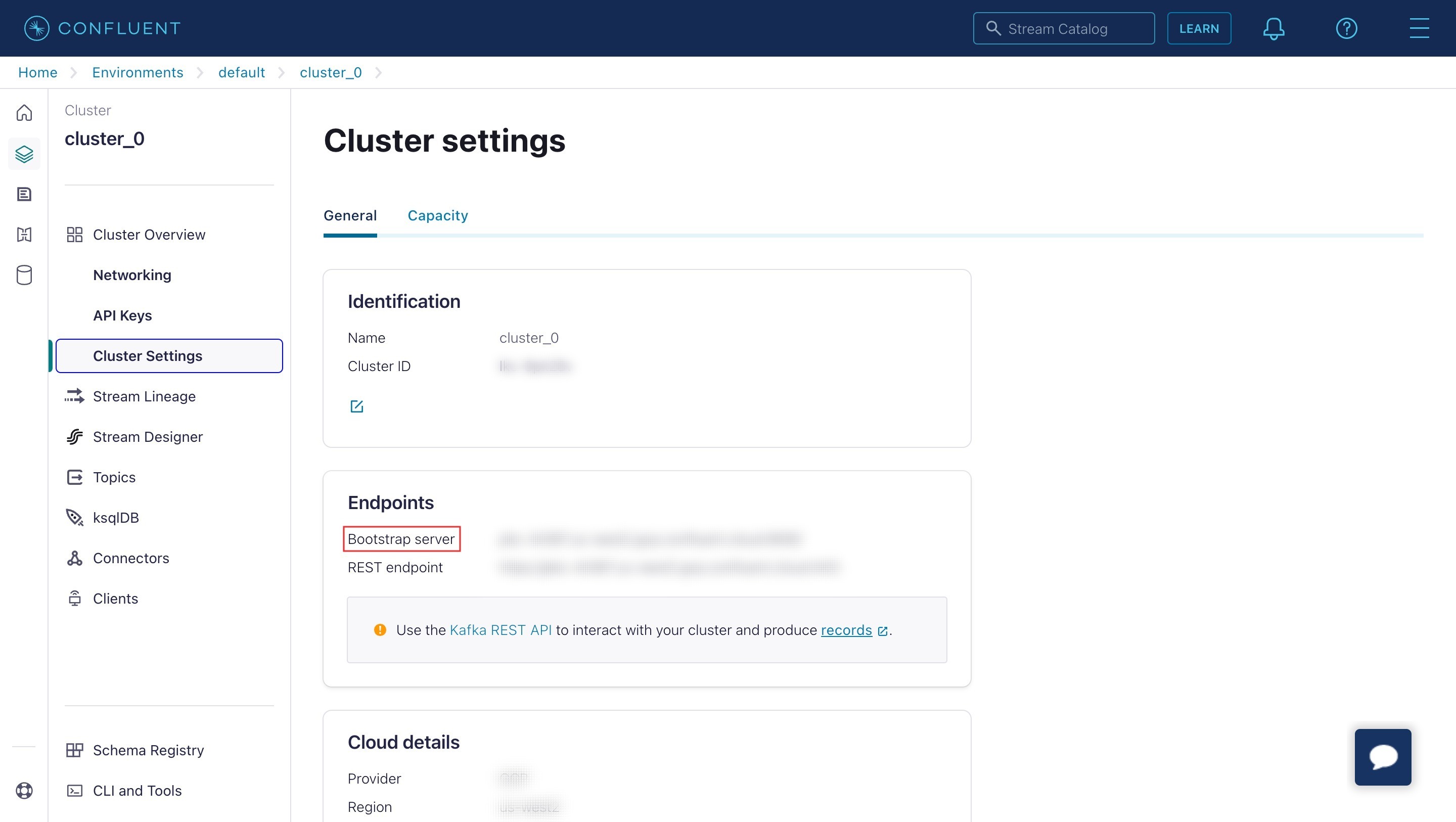

To retrieve the Bootstrap server:

- Sign in to the Confluent Cloud portal.

- Go to Cluster Overview > Cluster Settings > Endpoints.

- Copy the Bootstrap server.
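Before entering these values in Conductor, it can help to sanity-check them with a Kafka client. The following is a minimal sketch, not an official Orkes or Confluent utility: the function names are hypothetical, and the dictionary keys follow librdkafka-style client property names. It assumes the cluster accepts the API key and secret over SASL_SSL with the PLAIN mechanism, which is the usual Confluent Cloud setup; adjust the values if your cluster differs.

```python
def parse_bootstrap_servers(value: str) -> list[tuple[str, int]]:
    """Split a 'host1:9092,host2:9092' string into (host, port) pairs."""
    pairs = []
    for entry in value.split(","):
        entry = entry.strip()
        if not entry:
            continue
        host, sep, port = entry.rpartition(":")
        if not sep or not host or not port.isdigit():
            raise ValueError(f"expected host:port, got {entry!r}")
        pairs.append((host, int(port)))
    if not pairs:
        raise ValueError("no bootstrap servers given")
    return pairs


def build_client_config(
    bootstrap: str, api_key: str, api_secret: str, group_id: str
) -> dict[str, str]:
    """Assemble librdkafka-style client properties from the retrieved credentials."""
    parse_bootstrap_servers(bootstrap)  # fail early on a malformed address
    return {
        "bootstrap.servers": bootstrap,
        "security.protocol": "SASL_SSL",  # assumption: TLS plus SASL authentication
        "sasl.mechanisms": "PLAIN",       # assumption: API key/secret via SASL PLAIN
        "sasl.username": api_key,
        "sasl.password": api_secret,
        "group.id": group_id,
    }
```

The resulting dictionary can be passed to a Kafka client of your choice to confirm that the key, secret, and bootstrap server work together before you create the integration.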

The Schema registry server, API key, and secret are required only if you are integrating with a schema registry (for the AVRO protocol).

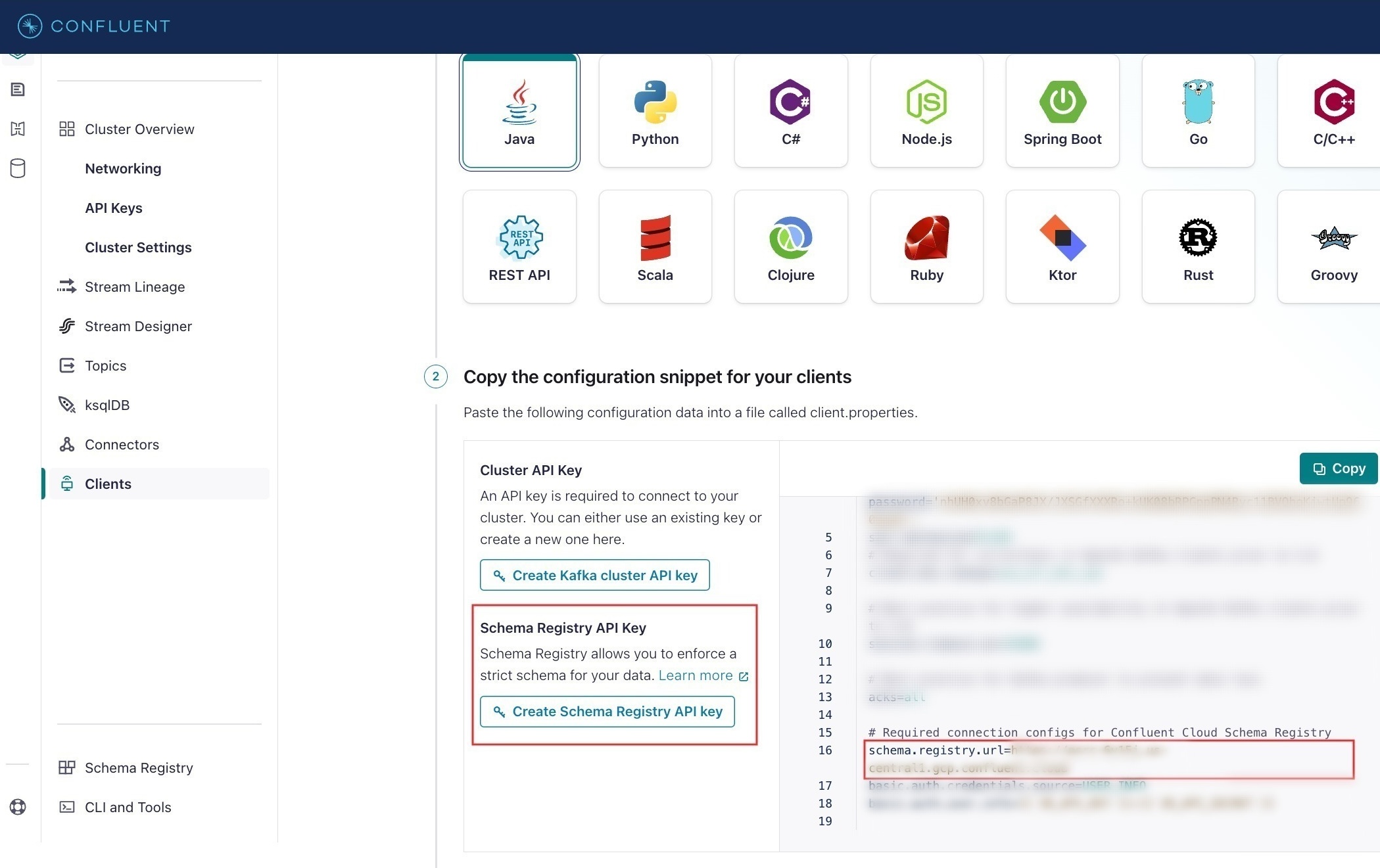

To get the Schema registry server and API keys:

- Sign in to the Confluent Cloud portal.

- Go to Clients > Add new client.

- Under Copy the configuration snippet for your clients, copy the URL shown for schema.registry.url.

- Select Create Schema Registry API key to download the file. The downloaded file contains the Schema Registry API key and secret.
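The schema registry URL and key pair retrieved above can be checked the same way. This is a sketch under stated assumptions: `build_schema_registry_config` is a hypothetical helper, and the `url` and `basic.auth.user.info` keys are the property names used by Confluent's Python schema registry client. Nothing here is required by Conductor itself.

```python
from urllib.parse import urlparse


def build_schema_registry_config(url: str, api_key: str, api_secret: str) -> dict[str, str]:
    """Build a schema registry client configuration from the retrieved values."""
    parsed = urlparse(url)
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        raise ValueError(f"schema registry URL must be an http(s) URL, got {url!r}")
    if not api_key or not api_secret:
        raise ValueError("both the API key and the API secret are required")
    return {
        "url": url,
        # Basic auth credentials are passed as a single "key:secret" string.
        "basic.auth.user.info": f"{api_key}:{api_secret}",
    }
```

Keeping the schema registry key pair separate from the cluster API key avoids mixing them up later, since the integration form asks for each in a different field.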

To integrate Amazon MSK with Orkes Conductor, retrieve the following credentials from your Amazon MSK console:

- Bootstrap server

- Username and Password

- Consumer Group ID

Refer to the official Amazon MSK documentation for more details.

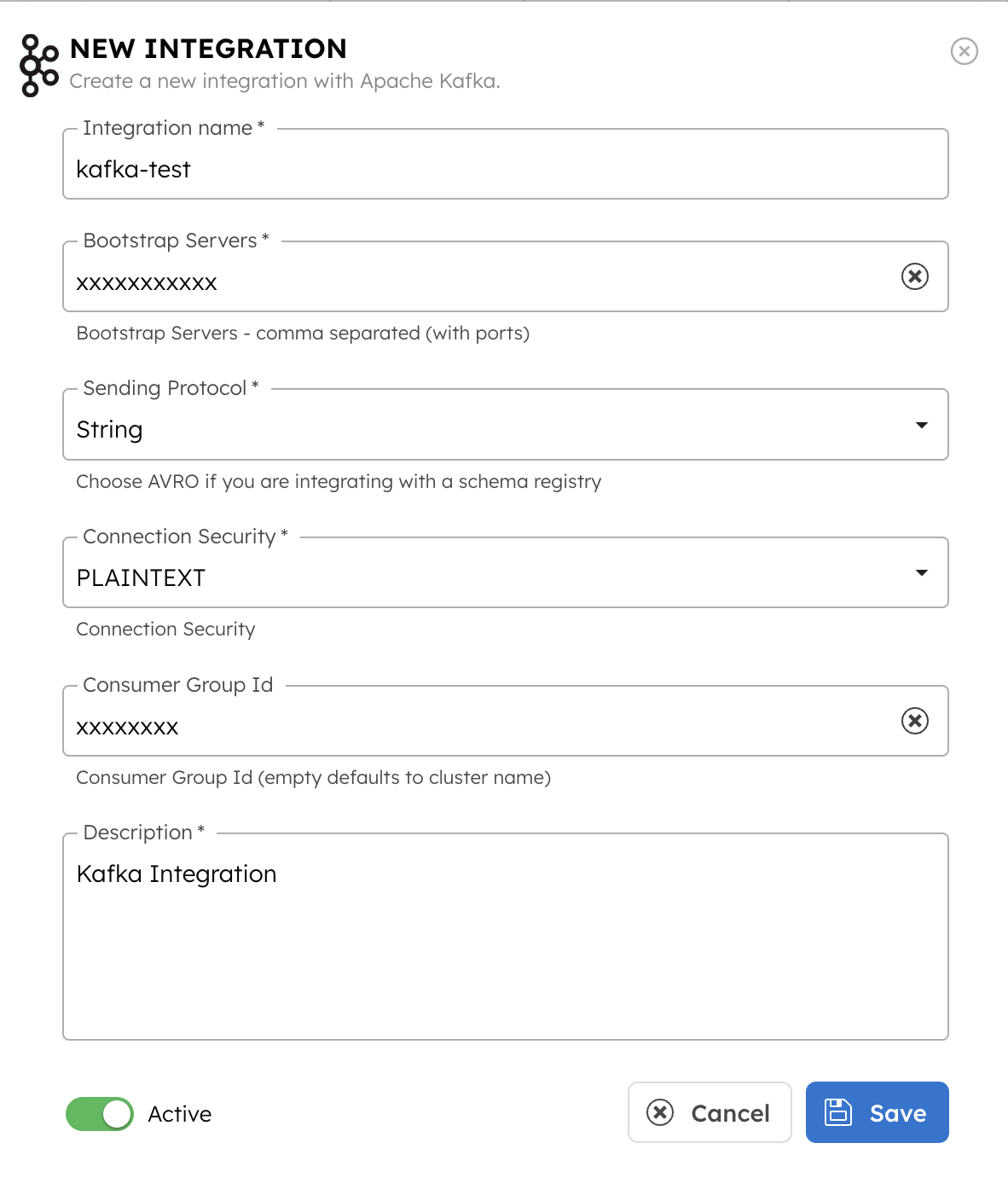

Step 2: Add an integration for Apache Kafka

After obtaining the credentials, add an Apache Kafka integration to your Conductor cluster.

To create an Apache Kafka integration:

- Go to Integrations from the left navigation menu on your Conductor cluster.

- Select + New integration.

- In the Message Broker section, choose Apache Kafka.

- Select + Add and enter the following parameters:

| Parameter | Description | Required / Optional | Notes |
|---|---|---|---|
| Integration name | A name for the integration. | Required. | – |
| Bootstrap Server | The bootstrap server of the Kafka cluster. | Required. | – |
| Sending Protocol | The sending protocol for the integration. Supported values: | Required. | AVRO protocol is not supported for Amazon MSK clusters. |
| Connection Security | The security mechanism for connecting to the Kafka cluster. Supported values: | Required. | |
| Username | The username to authenticate with the Kafka cluster. For AVRO configuration, use the API key copied from Step 1 as the username. | Required (except for PLAINTEXT) | – |
| Password | The password associated with the username. For AVRO configuration, use the API secret copied from Step 1 as the password. | Required (except for PLAINTEXT) | – |
| Truststore type | If SSL encryption is enabled, select the trust store type. Supported values: | Required for connection types SASL_SSL / PLAIN and SASL_PLAINTEXT. | Not supported for Amazon MSK clusters. |
| Trust Store Password | The password for the trust store. | Required if Truststore type is JKS. | Not supported for Amazon MSK clusters. |
| Select Sasl mechanism | The SASL mechanism to connect to the Kafka cluster. Supported values: | Required if Connection Security is SASL_PLAINTEXT. | – |
| Schema Registry URL | The Schema Registry URL copied from the Kafka console. | Required if Sending Protocol is AVRO. | Not supported for Amazon MSK clusters. |
| Schema Registry Auth Type | The authentication mechanism for connecting to the schema registry. Supported values: | Required if Sending Protocol is AVRO. | Not supported for Amazon MSK clusters. |
| Schema Registry API Key | The schema registry API key obtained from the schema registry server. | Required if | Not supported for Amazon MSK clusters. |
| Schema Registry API Secret | The schema registry API secret obtained from the schema registry server. | Required if | Not supported for Amazon MSK clusters. |
| Value Subject Name Strategy | The strategy for constructing the subject name under which the AVRO schema will be registered in the schema registry. Supported values: | Required if Sending Protocol is AVRO. | Not supported for Amazon MSK clusters. |
| Consumer Group ID | The Consumer Group ID from Kafka. This unique identifier helps manage message processing, load balancing, and fault tolerance within consumer groups. | Required. | – |
| Description | A description of the integration. | Required. | – |

- (Optional) Toggle the Active button off if you don’t want to activate the integration immediately.

- Select Save.
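The conditional requirements in the parameter table can be easy to miss when filling in the form. The sketch below encodes the rules that the table states explicitly as a pre-flight check. It is illustrative only: the snake_case keys are hypothetical names for the form fields, not an Orkes API, and the Truststore type rule and the two Schema Registry API key rules are left out because their exact conditions are not spelled out here.

```python
# Fields the table marks as always required (hypothetical key names).
REQUIRED_ALWAYS = [
    "integration_name",
    "bootstrap_server",
    "sending_protocol",
    "connection_security",
    "consumer_group_id",
    "description",
]


def missing_parameters(params: dict[str, str]) -> list[str]:
    """Return the required fields that are absent or empty, in table order."""
    required = list(REQUIRED_ALWAYS)
    security = params.get("connection_security", "")

    # Username and password are required for everything except PLAINTEXT.
    if security != "PLAINTEXT":
        required += ["username", "password"]
    # The SASL mechanism is required when Connection Security is SASL_PLAINTEXT.
    if security == "SASL_PLAINTEXT":
        required.append("sasl_mechanism")
    # The trust store password is required when Truststore type is JKS.
    if params.get("truststore_type") == "JKS":
        required.append("truststore_password")
    # Schema registry settings are required when Sending Protocol is AVRO.
    if params.get("sending_protocol") == "AVRO":
        required += [
            "schema_registry_url",
            "schema_registry_auth_type",
            "value_subject_name_strategy",
        ]
    return [name for name in required if not params.get(name)]
```

An empty list means every rule encoded above is satisfied; the integration form still performs its own validation when you select Save.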

Step 3: Set access limits to the integration

Once the integration is configured, set access controls to manage which applications or groups can use the message broker.

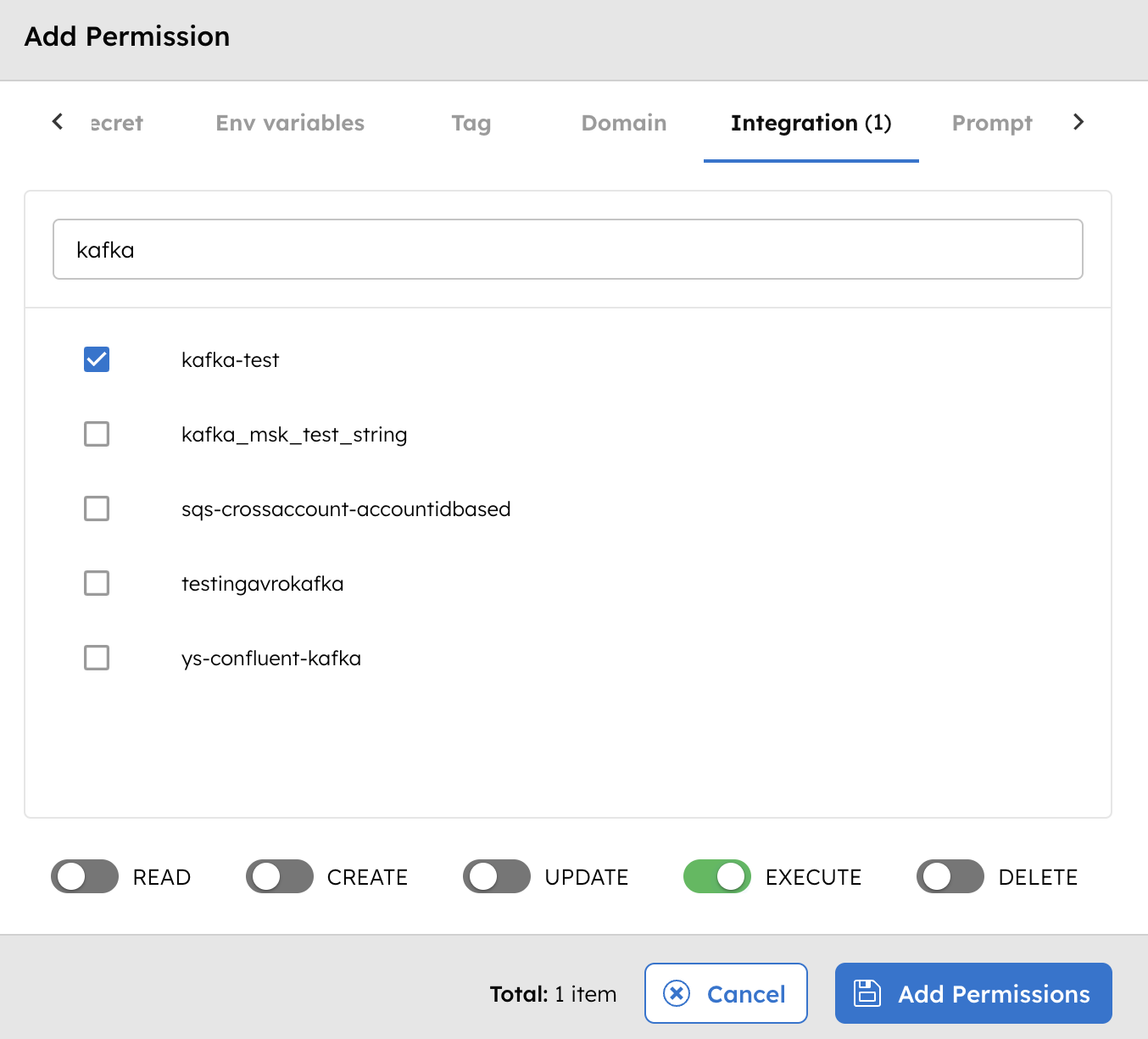

To provide access to an application or group:

- Go to Access Control > Applications or Groups from the left navigation menu on your Conductor cluster.

- Create a new group/application or select an existing one.

- In the Permissions section, select + Add Permission.

- In the Integration tab, select the required message broker and toggle the necessary permissions.

The group or application can now access the message broker according to the configured permissions.

Next steps

With the integration in place, you can now:

- Create Event Handlers.

- Configure Event tasks.

- Enable Change Data Capture (CDC) to send workflow state changes to message brokers.
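As a rough illustration of the second item, an Event task refers to the integration by name in its sink. The sketch below assumes the sink string takes the form `kafka:<integration-name>:<topic>` and that the task definition uses the `EVENT` type with `sink` and `inputParameters` fields; both helpers are hypothetical, so confirm the exact format against the Event task reference before relying on it.

```python
def kafka_sink(integration_name: str, topic: str) -> str:
    """Build a sink string, assuming the kafka:<integration-name>:<topic> form."""
    for label, value in (("integration name", integration_name), ("topic", topic)):
        if not value or ":" in value:
            raise ValueError(f"{label} must be non-empty and must not contain ':'")
    return f"kafka:{integration_name}:{topic}"


def event_task(ref_name: str, integration_name: str, topic: str, payload: dict) -> dict:
    """Sketch of an Event task definition that publishes payload to a Kafka topic."""
    return {
        "name": ref_name,
        "taskReferenceName": ref_name,
        "type": "EVENT",
        "sink": kafka_sink(integration_name, topic),
        "inputParameters": payload,
    }
```

Here `integration_name` is the Integration name entered in Step 2, and the application running the workflow needs the permissions granted in Step 3.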