LLM Generate Embeddings

The LLM Generate Embeddings task converts input text into a sequence of vectors, also known as embeddings. These embeddings are numerical representations of the input text and can be stored in a vector database for later retrieval. The task uses a large language model (LLM) that has already been integrated with your cluster to generate the embeddings.

At run time, the task evaluates the specified parameters, such as the LLM provider and model, and passes the input text through the selected LLM to generate a corresponding sequence of vectors. The output is a JSON array containing these vectors, which can be used in subsequent tasks or stored for future use.

- Prerequisite: integrate the required AI model with Orkes Conductor before using this task.

Task parameters

Configure these parameters for the LLM Generate Embeddings task.

| Parameter | Description | Required/Optional |
|---|---|---|
| inputParameters.llmProvider | The integration name of the LLM provider integrated with your Conductor cluster. Note: If you haven’t configured your AI/LLM provider on your Orkes Conductor cluster, go to the Integrations tab and configure your required provider. | Required. |
| inputParameters.model | The language model to use, chosen from those available within the selected LLM provider. For example, if your LLM provider is Azure OpenAI and you’ve configured text-davinci-003 as the language model, you can select it here. | Required. |
| inputParameters.text | The text to be converted into a vector. It can also be passed as a variable, such as ${workflow.input.text}. | Required. |
| inputParameters.dimensions | The size of each output vector, that is, the number of elements it contains. | Optional. |

The following are generic configuration parameters that can be applied to the task and are not specific to the LLM Generate Embeddings task.

Caching parameters

You can cache the task outputs using the following parameters. Refer to Caching Task Outputs for a full guide.

| Parameter | Description | Required/Optional |
|---|---|---|
| cacheConfig.ttlInSecond | The time to live in seconds, which is the duration for the output to be cached. | Required if using cacheConfig. |
| cacheConfig.key | The cache key is a unique identifier for the cached output and must be constructed exclusively from the task’s input parameters. It can be a string concatenation that contains the task’s input keys, such as ${uri}-${method} or re_${uri}_${method}. | Required if using cacheConfig. |
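
As a rough sketch of how the caching parameters fit into a task definition, the fragment below adds a cacheConfig block to an LLM Generate Embeddings task. The one-hour TTL and the key pattern `${model}-${text}` are assumptions chosen for illustration; the key simply follows the same input-key concatenation pattern described in the table. The provider and model names are placeholders to replace with the ones configured in your own integration.

```json
{
  "name": "llm_generate_embeddings",
  "taskReferenceName": "llm_generate_embeddings_ref",
  "inputParameters": {
    "llmProvider": "openAI",
    "model": "chatgpt-4o-latest",
    "text": "${workflow.input.text}"
  },
  "cacheConfig": {
    "ttlInSecond": 3600,
    "key": "${model}-${text}"
  },
  "type": "LLM_GENERATE_EMBEDDINGS"
}
```

With a key built this way, two executions that use the same model and the same text would be expected to share a cached result until the TTL expires.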

Other generic parameters

Here are other parameters for configuring the task behavior.

| Parameter | Description | Required/Optional |
|---|---|---|
| optional | Whether the task is optional. If set to true, any task failure is ignored, and the workflow continues with the task status updated to COMPLETED_WITH_ERRORS. However, the task must reach a terminal state. If the task remains incomplete, the workflow waits until it reaches a terminal state before proceeding. | Optional. |
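
For illustration, marking the task as optional might look like the fragment below. This is a minimal sketch: the provider and model values are placeholders, and whether embedding generation should really be optional depends on your workflow.

```json
{
  "name": "llm_generate_embeddings",
  "taskReferenceName": "llm_generate_embeddings_ref",
  "inputParameters": {
    "llmProvider": "openAI",
    "model": "chatgpt-4o-latest",
    "text": "${workflow.input.text}"
  },
  "optional": true,
  "type": "LLM_GENERATE_EMBEDDINGS"
}
```

Note that later tasks reading this task's output should be prepared for the output to be missing when the task ends as COMPLETED_WITH_ERRORS.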

Task configuration

This is the task configuration for an LLM Generate Embeddings task.

    {
      "name": "llm_generate_embeddings",
      "taskReferenceName": "llm_generate_embeddings_ref",
      "inputParameters": {
        "llmProvider": "openAI",
        "model": "chatgpt-4o-latest",
        "text": "${workflow.input.text}",
        "dimensions": 3024
      },
      "type": "LLM_GENERATE_EMBEDDINGS"
    }

Task output

The LLM Generate Embeddings task returns the following parameters.

| Parameter | Description |
|---|---|
| result | A JSON array containing the vectors of the indexed data. |
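
To show how the output might be consumed, the sketch below passes the result array to a later task using the task's reference name. The downstream task `example_store_vectors` and its `embeddings` input are hypothetical, standing in for whatever worker or system task handles the vectors in your workflow; only the `${llm_generate_embeddings_ref.output.result}` expression relies on the reference name and output parameter documented here.

```json
{
  "name": "example_store_vectors",
  "taskReferenceName": "example_store_vectors_ref",
  "inputParameters": {
    "embeddings": "${llm_generate_embeddings_ref.output.result}"
  },
  "type": "SIMPLE"
}
```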

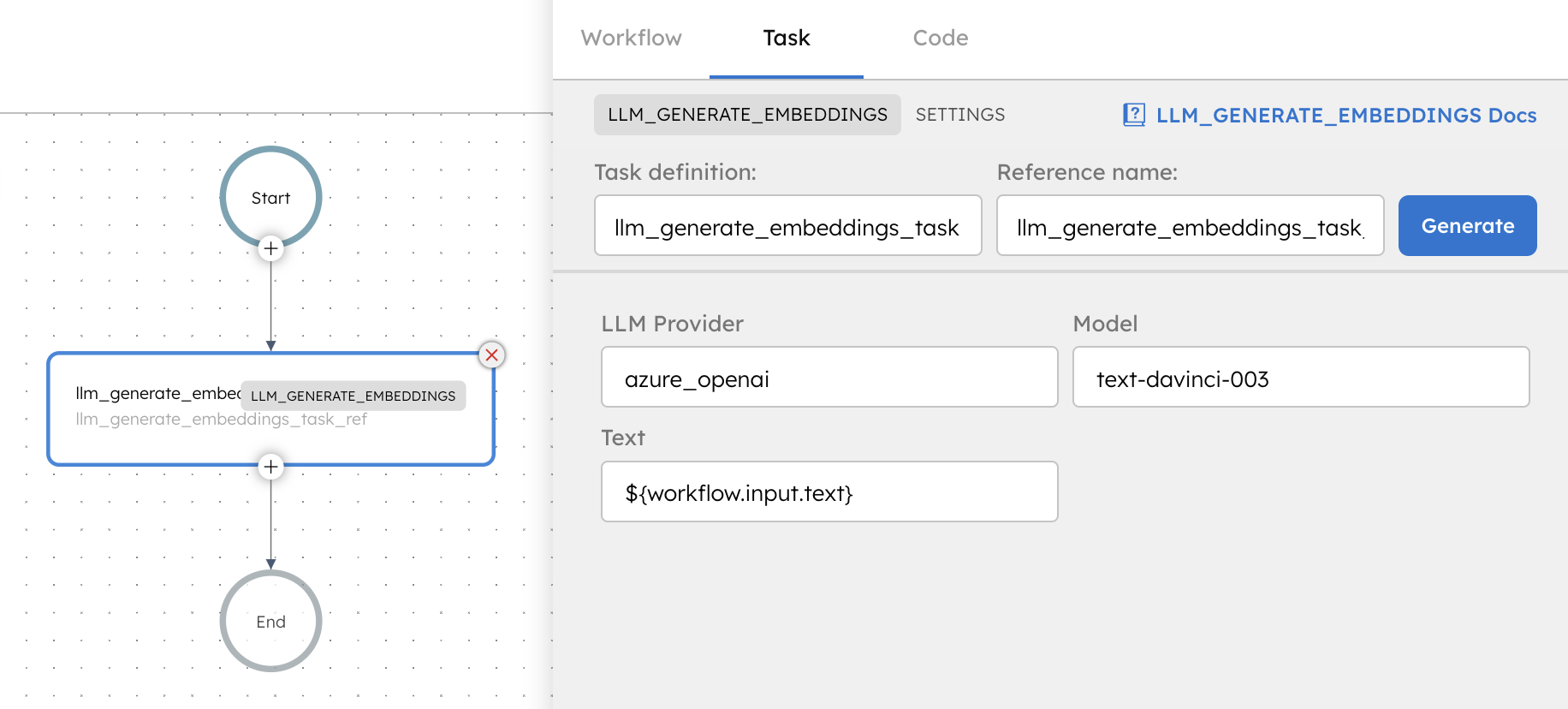

Adding an LLM Generate Embeddings task in UI

To add an LLM Generate Embeddings task:

- In your workflow, select the (+) icon and add an LLM Generate Embeddings task.

- In Provider and Model, select the LLM provider and the Model.

- In Embedding Configuration, enter the Text and Dimensions.

Examples

Here are some examples of using the LLM Generate Embeddings task.

Using an LLM Generate Embeddings task in a workflow

See an example of building a question-answering workflow using stored embeddings.
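
As a smaller illustration, a minimal workflow definition that wraps the task might look like the following sketch. The workflow name, description, and output key are hypothetical, and the provider and model values are placeholders for the ones configured in your integration.

```json
{
  "name": "example_generate_embeddings_workflow",
  "description": "Hypothetical workflow for illustration only",
  "version": 1,
  "schemaVersion": 2,
  "inputParameters": ["text"],
  "tasks": [
    {
      "name": "llm_generate_embeddings",
      "taskReferenceName": "llm_generate_embeddings_ref",
      "inputParameters": {
        "llmProvider": "openAI",
        "model": "chatgpt-4o-latest",
        "text": "${workflow.input.text}"
      },
      "type": "LLM_GENERATE_EMBEDDINGS"
    }
  ],
  "outputParameters": {
    "embeddings": "${llm_generate_embeddings_ref.output.result}"
  }
}
```

Running this workflow with an input such as `{"text": "some sentence"}` would be expected to surface the generated vectors as the workflow output under `embeddings`.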