Featured Blog

Expedite Tesla Powerwall Payback Period with Automated Solar Production Curtailment During Negative Feed-In Pricing with Australia’s Amber Electric and Orkes

Automate Tesla Powerwall export curtailment during negative Amber pricing using Orkes, accelerating solar payback with zero manual effort....

ALL, AGENTIC, ENGINEERING, MCP

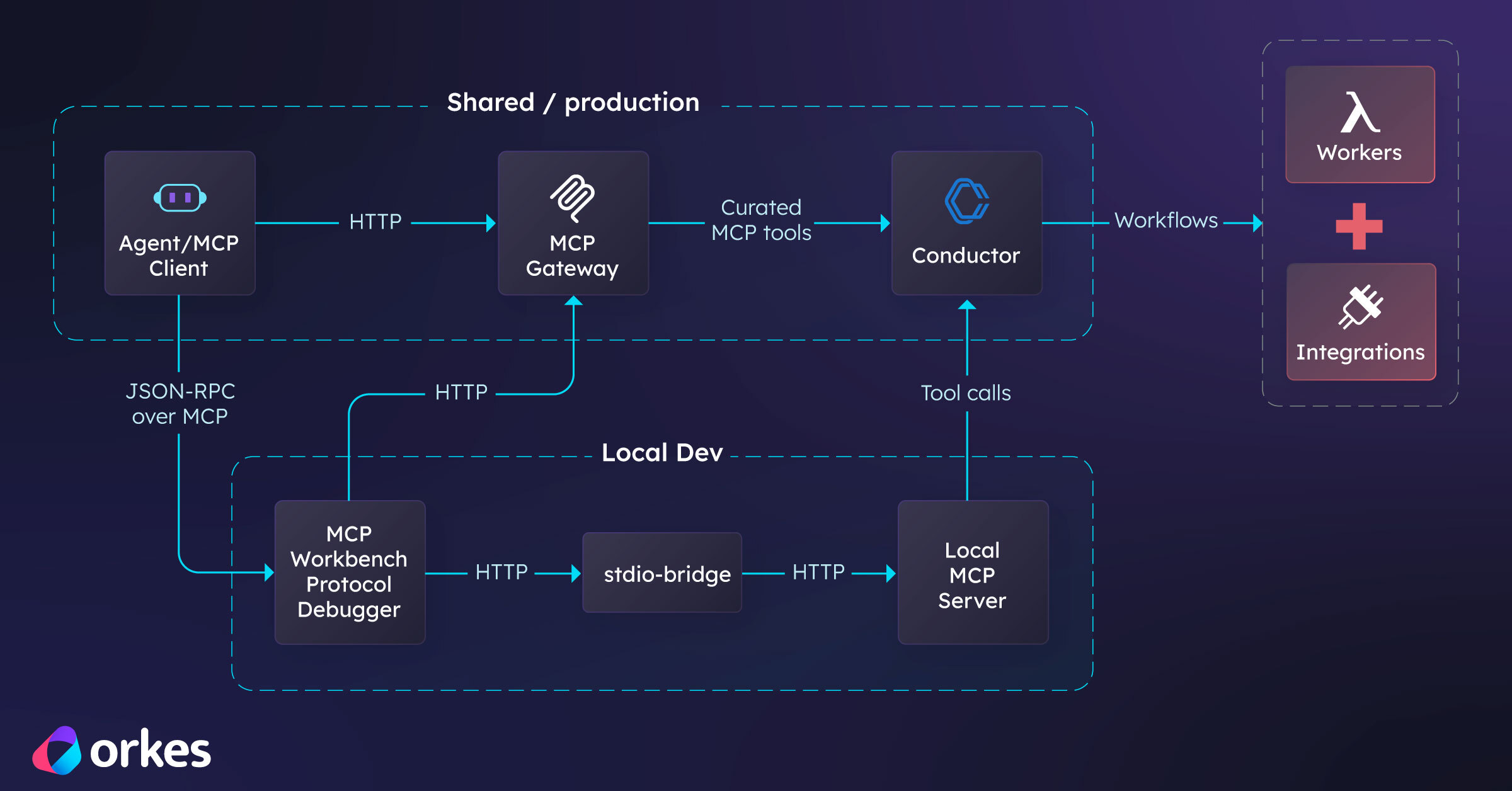

MCP Workbench: A Protocol Debugger for Tool-Using Agents

January 23, 2026

A technical walkthrough of MCP, why debugging MCP matters, how MCP Workbench is built to help, and a practical getting-started project you can validat...

ALL, AGENTIC, ENGINEERING, SOLUTIONS

Technical Guide: Orchestrating Your LangChain Agents for Production with Orkes Conductor

January 19, 2026

Learn how to orchestrate LangChain agents for production using Orkes Conductor with retries, human approval, and full observability....

ALL, AGENTIC, ANNOUNCEMENTS

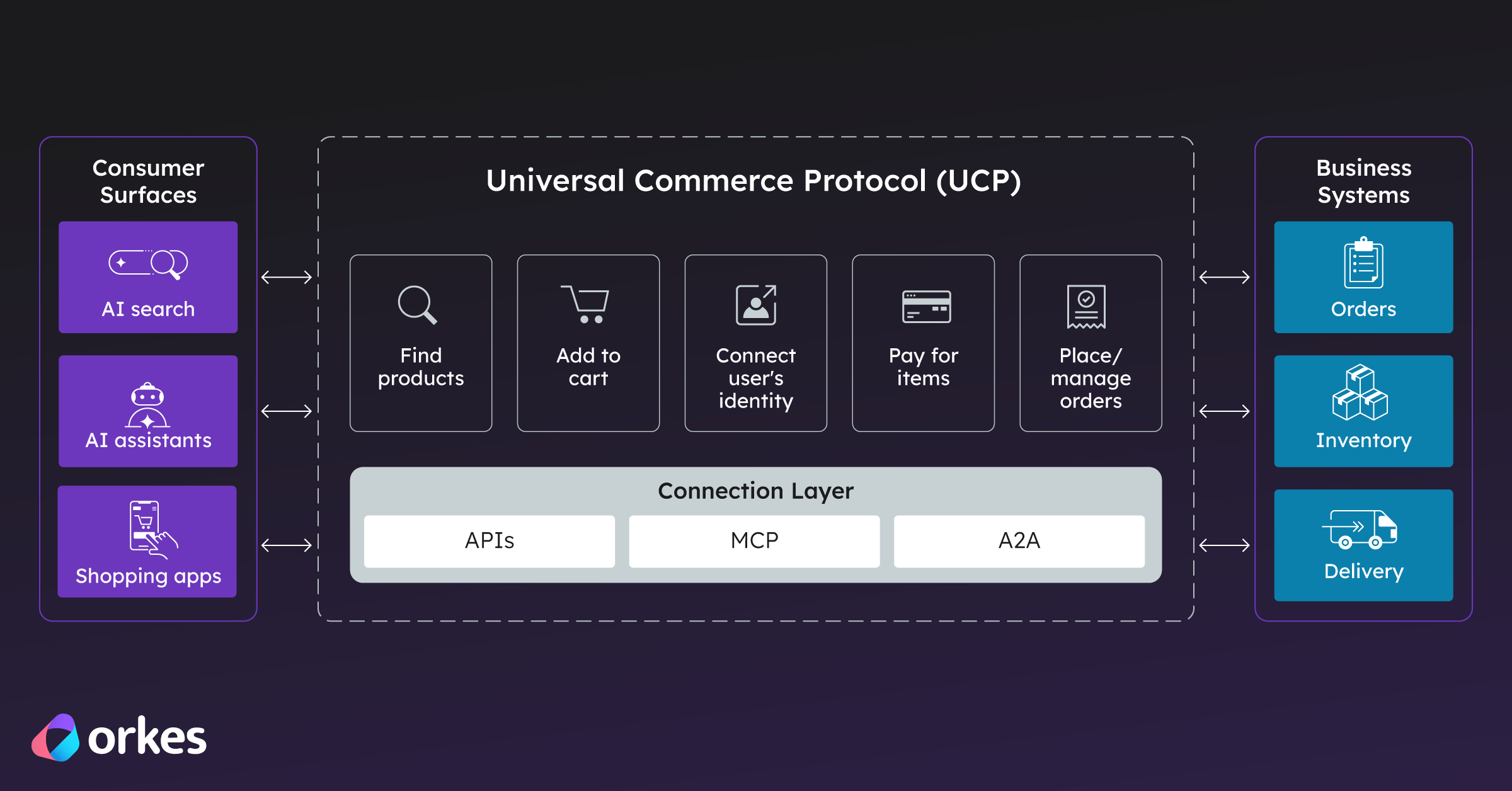

Universal Commerce Protocol (UCP) Explained: Beyond Google's Announcement

January 13, 2026

Universal Commerce Protocol (UCP) explained: what it is, how it works, and why it matters for the future of AI shopping....

ALL, AGENTIC, ANNOUNCEMENTS

GPT-5.2 Is Here—Now Put It to Work in Orkes Conductor

December 15, 2025

GPT-5.2 just shipped. Here’s how to plug it into Orkes Conductor and start running real agentic workflows (with guardrails, observability, and the fle...

ALL, AGENTIC, ENGINEERING

Build an AI-Powered Loan Risk Assessment Workflow (with Sub-Workflows + Human Review)

December 4, 2025

Step-by-step tutorial to build an AI-powered loan risk assessment workflow in Orkes Conductor using OCR, LLM scoring, sub-workflows, and human review....

ALL, AGENTIC, ENGINEERING

Enterprise Uptime Guardrails: Build a Website Health Checker Workflow (HTTP Checks + Inline Logic + SMS Alerts)

December 2, 2025

Step-by-step guide to building a Conductor workflow that monitors a website URL, evaluates health from HTTP status codes, and texts you via Twilio whe...

ALL, AGENTIC, ENGINEERING

Vector Databases 101: A Simple Guide for Building AI Apps with Conductor

November 26, 2025

Vector databases explained simply, and how Conductor makes working with them straightforward by integrating with popular vector databases li...

ALL, AGENTIC, ENGINEERING

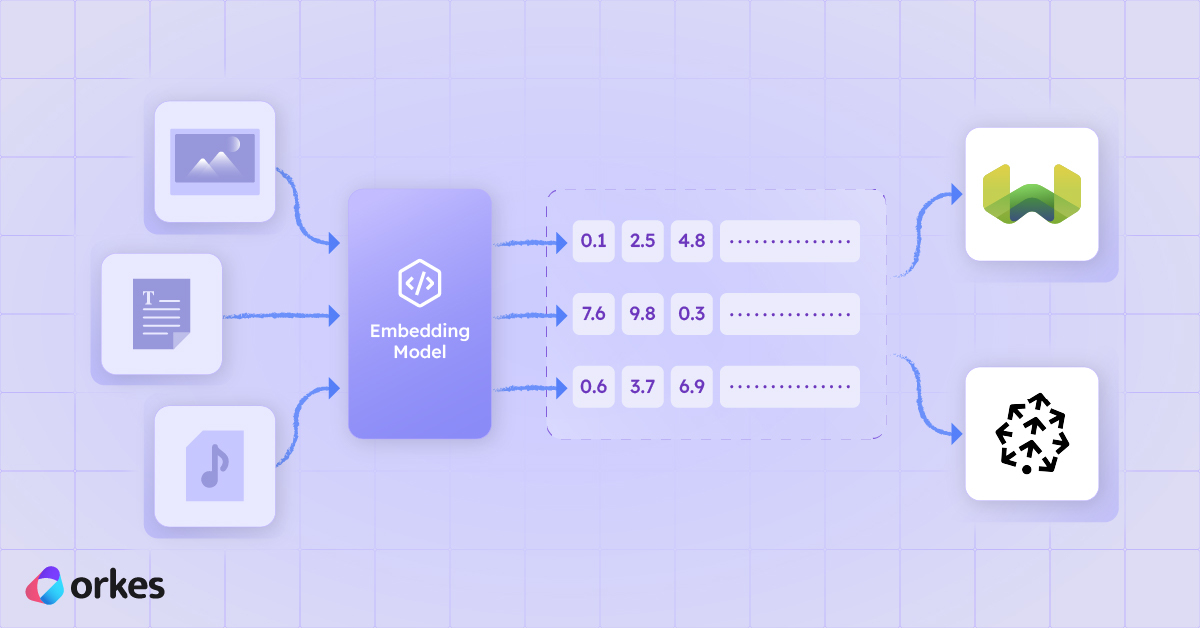

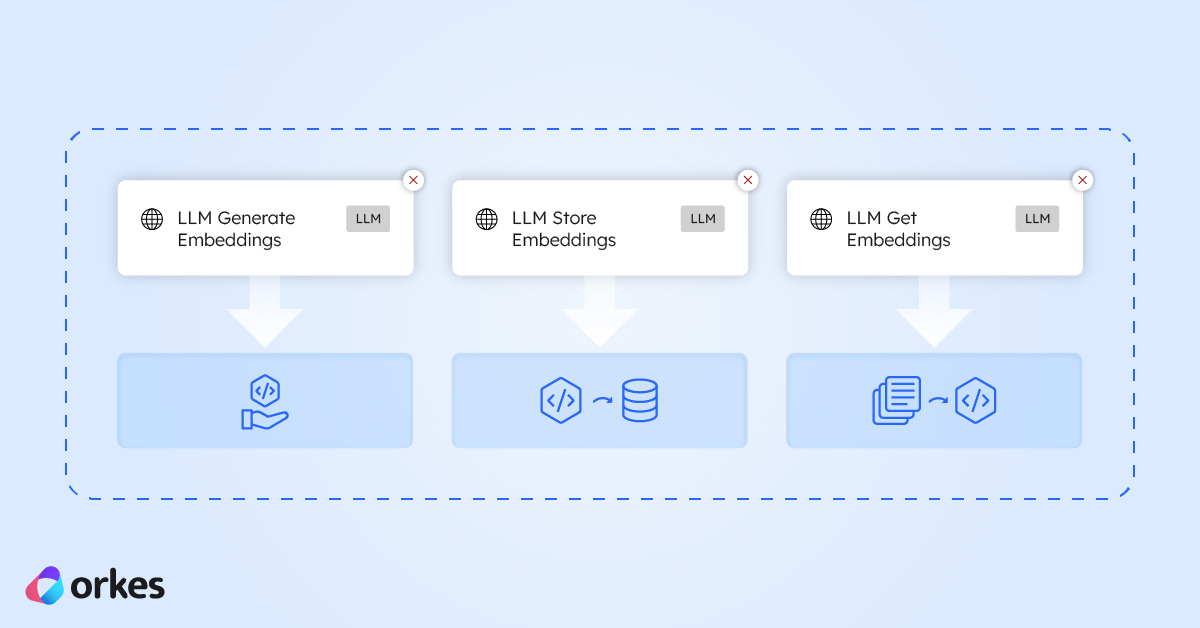

Orkes Conductor Embeddings Explained: The Tasks Behind Semantic Search & AI Workflows

November 25, 2025

Here’s a quick and easy rundown of how to use Orkes Conductor’s LLM embedding tasks to turn your text into vectors, store them in a database, and use ...

ALL, AGENTIC, ENGINEERING

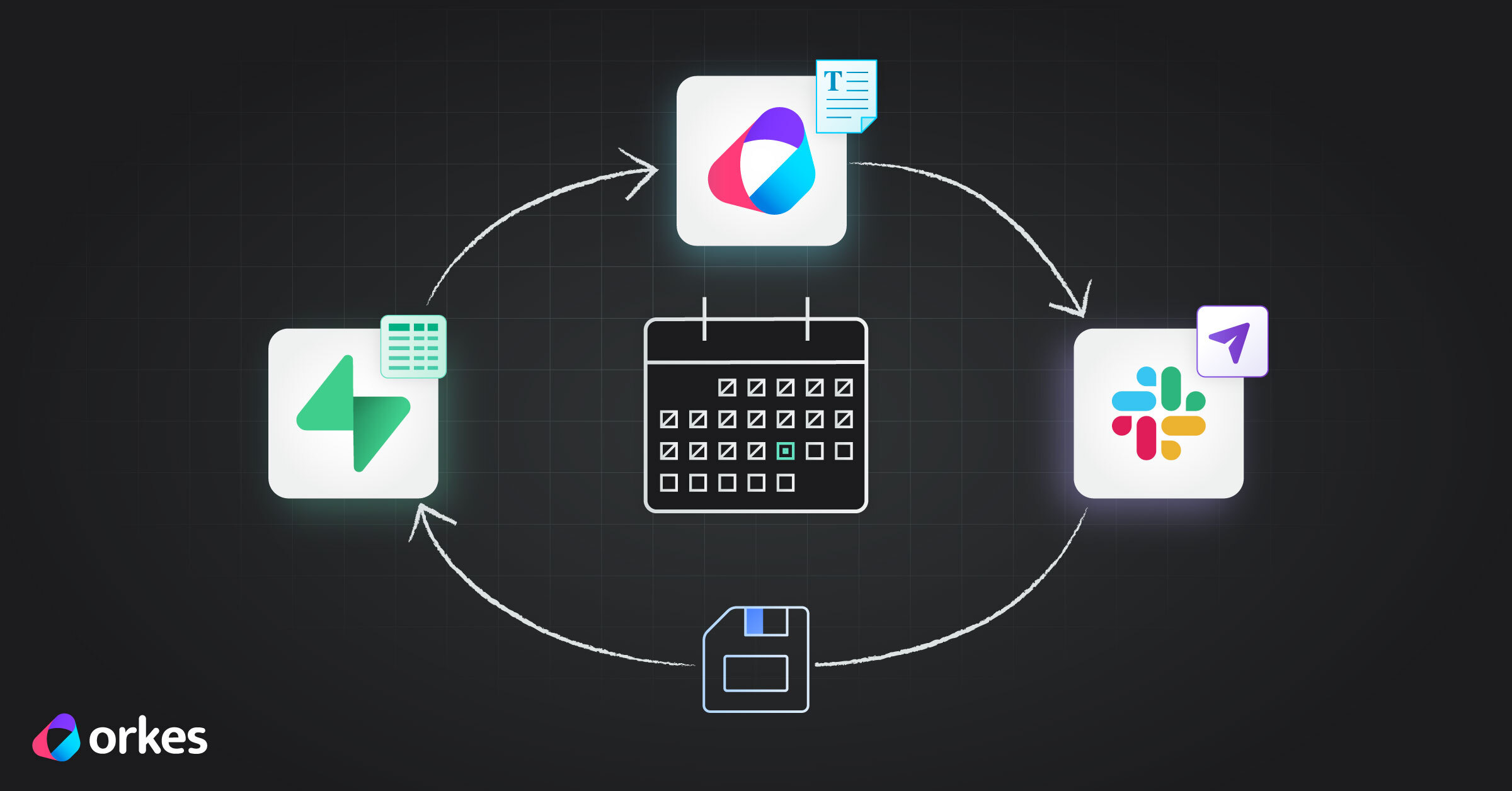

How to Orchestrate a Real Workflow Using Slack and Supabase (With Conductor Doing the Heavy Lifting)

November 19, 2025

If you've ever struggled to connect tools like Slack and Supabase into one smooth workflow, this guide shows how to orchestrate the whole process, wit...
